GRCon25 CTF Challenges (spoilers)

Capture the flag (CTF) has always been my favorite part of GRCon -- not only is it a ton of fun to hack away at puzzles, but it is a great way to gain valuable experience in working with the various software tools used in DSP and communications. When I was teaching, I would often repurpose these challenges as projects and/or exams for my students! However, due to unavoidable circumstances, our CTF was in danger of being cancelled this year. I had some extra bandwidth, as I was transitioning between jobs -- and I was already planning on being at the conference, so I volunteered to organize the event. For the past several years, @argilo has run an impeccable event, so the bar was high! Thanks to some hard work and fantastic submissions from a number of people, I think the event was a great success! Special thanks to Gary Schafer for building Always the Same Color, Never the Same Color, Please Ask Later, and Signal Identification part 2.

Always the Same Color

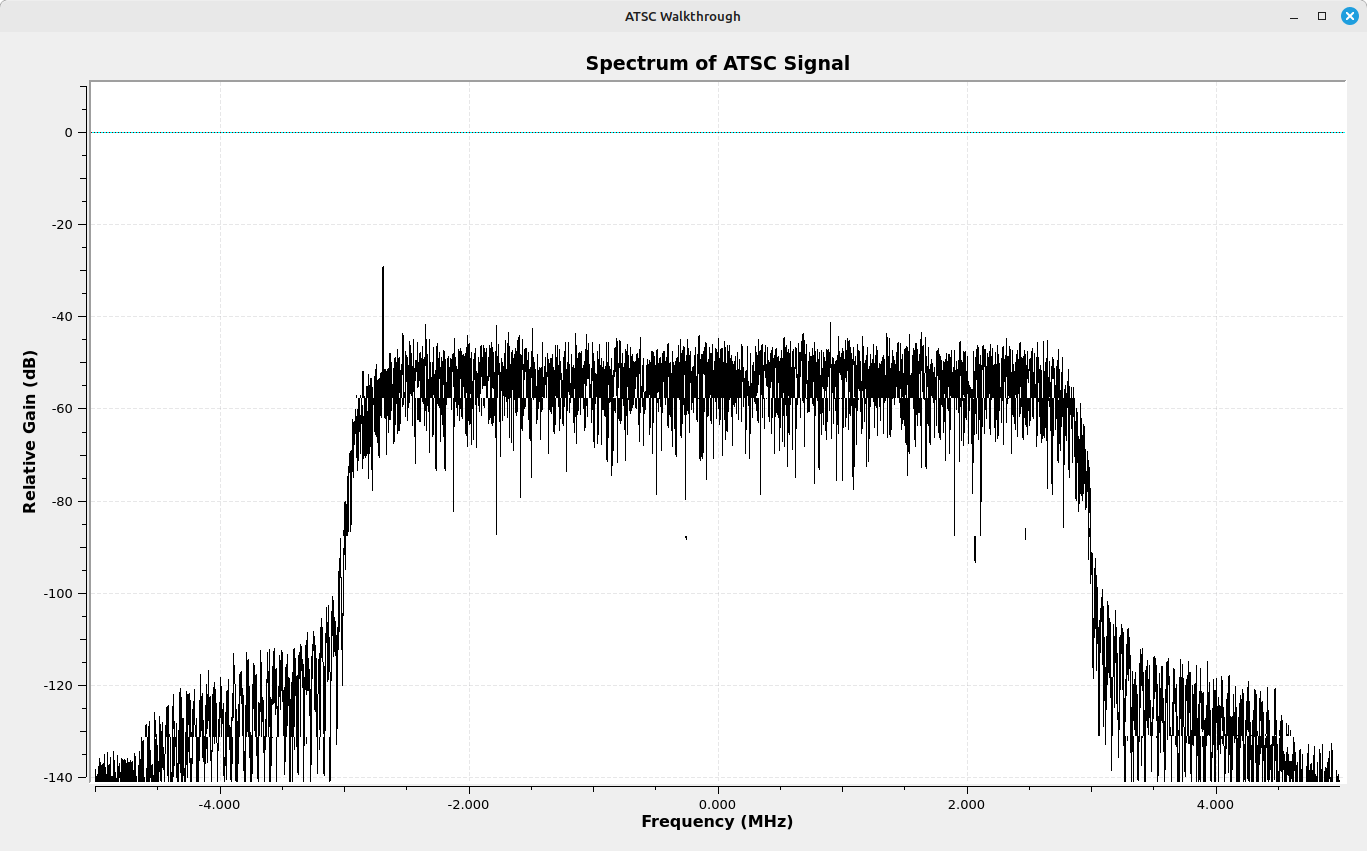

This challenge consists of a spectrum that is 10 MHz wide. In the center is a flat-topped signal with a spectral line on the lower end. The spectrum and the spectral line indicate an Advanced Television Systems Committee (ATSC) digitally-modulated carrier. Specifically, ATSC Ver 1.

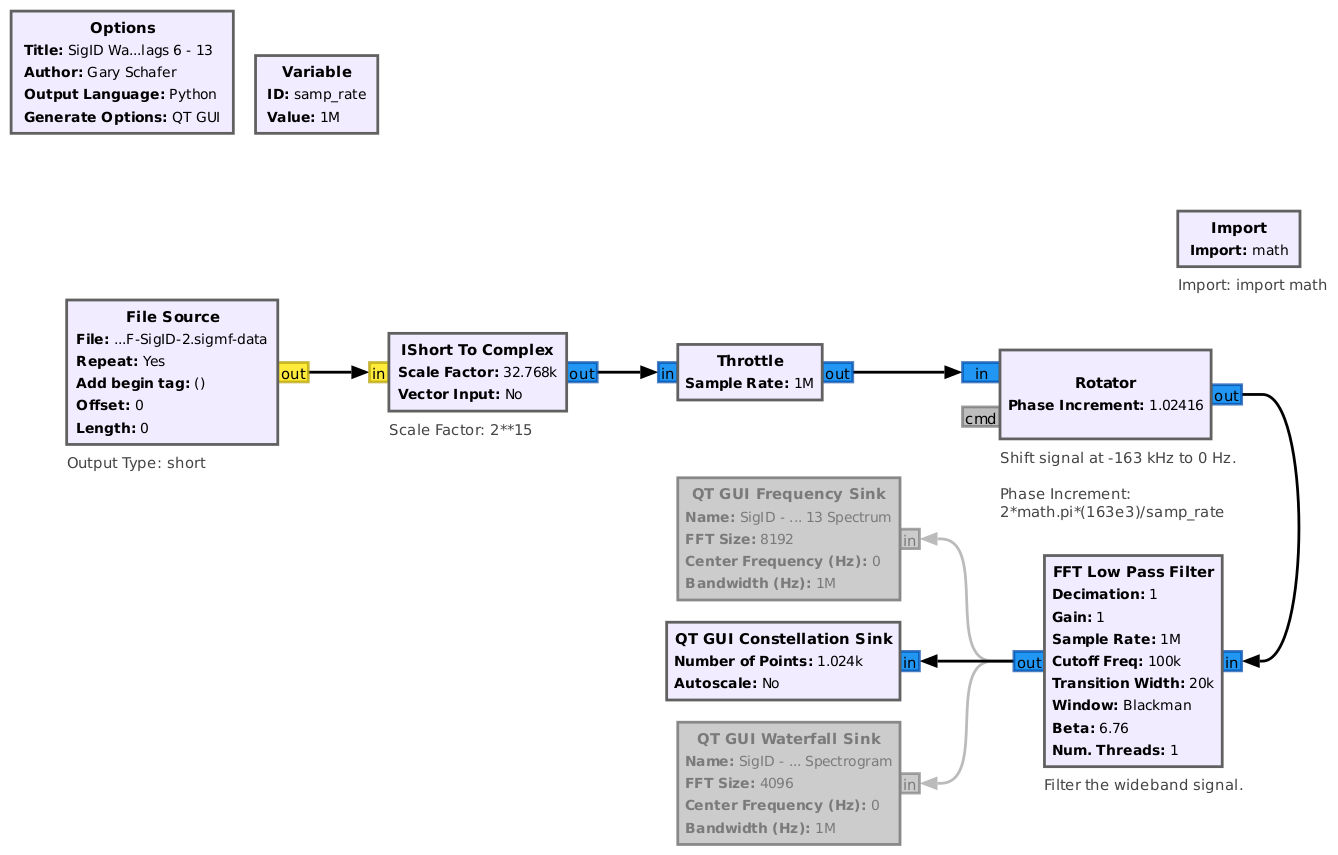

GNURadio has a block that can be used to demodulate this type of signal, specifically the ATSC Receive Pipeline block. The following flowgraph incorporates this block:

Using the method above (saving the transport stream as a .ts file) and using readily-available software (VLC, Celluloid, SMPlayer), playing the file provides the following initial screen (using SMPlayer on Linux):

One of the flags is clearly visible in video track 1 of the .TS file. Note that transport streams are simply "vessels" that can hold multiple audio and video tracks. Using your chosen video player, you should have the option to look at separate audio and video tracks. The second audio track is simply a nice little Rick Roll (you're welcome). The video, while also part of the Rick Roll, contains flag 2 in the visible area of the right side of the screen.

The last two flags are a bit trickier. One of the clues provided is "pay attention to all that you see and hear". The "hear" part is the most important. An extra hint provided (assuming you were willing to accept the cost in points) was, "Your hearing only goes up to about 15 kHz. The sample rate for ATSC audio can be up to 48 kHz. Process accordingly." If the sample rate is 48 kHz, then the maximum frequency of that signal can be up to 24 kHz, though with filtering the usable bandwidth is typically about 80% of that, or about 20 kHz. That leaves about 5 kHz for bad actors to play with. Like me.

There are a few ways to access this extra audio:

1. Play the audio track while recording with a record-what-you-hear program. NOTE: This really needs to be an uncompressed format. Recording in MP3 or a screen capture using MP4, such as is used by OBS Studio, will typically damage, if not outright destroy, this extra audio information.

2. Record the audio (again, in uncompressed format) using an audio recorder with the audio being played by your video player or by using a transmit-capable SDR to transmit the ATSC signal to an ATSC-ready TV. (NOTE: This was tested using an older Toshiba, and it actually worked. The recorder was an Android tablet with a standard audio recording program.)

3. Use the command-line program "ffmpeg" to extract the audio tracks from the .ts file. THIS IS PROBABLY THE BEST METHOD. ffmpeg will extract the audio straight to a .WAV file (uncompressed) where it can be manipulated with Gnu Radio or any of a host of audio processing programs. ffmpeg also provides a command to see all the available tracks in an audio/video file. Running the command for our .ts file provides the following:

Input #0, mpegts, from 'ATSC-signal.ts':

Duration: 19:53:18.22, start: 23862.929422, bitrate: 4 kb/s

Program 1

Metadata:

service_name : Service01

service_provider: FFmpeg

Stream #0:0[0x100]: Video: mpeg2video (Main) ([2][0][0][0] / 0x0002), yuv420p(tv, bt470bg/unknown/unknown, progressive), 1920x1080 [SAR 1:1 DAR 16:9], 30 fps, 30 tbr, 90k tbn, 60 tbc

Side data:

cpb: bitrate max/min/avg: 0/0/0 buffer size: 1425408 vbv_delay: N/A

Stream #0:1[0x101](und): Audio: ac3 (AC-3 / 0x332D4341), 48000 Hz, mono, fltp, 640 kb/s

Stream #0:2[0x102]: Video: mpeg2video (Main) ([2][0][0][0] / 0x0002), yuv420p(tv, bt470bg/unknown/unknown, progressive), 1920x1080 [SAR 1:1 DAR 16:9], 30 fps, 30 tbr, 90k tbn, 60 tbc

Side data:

cpb: bitrate max/min/avg: 0/0/0 buffer size: 1425408 vbv_delay: N/A

Stream #0:3[0x103](und): Audio: ac3 (AC-3 / 0x332D4341), 48000 Hz, mono, fltp, 640 kb/s

This shows four "streams" (tracks), two audio and two video. Extracting the first audio track, listed as "Stream #0:1", can be done with the following command:

ffmpeg -i ATSC-signal.ts -map 0:1 track1audio.wav

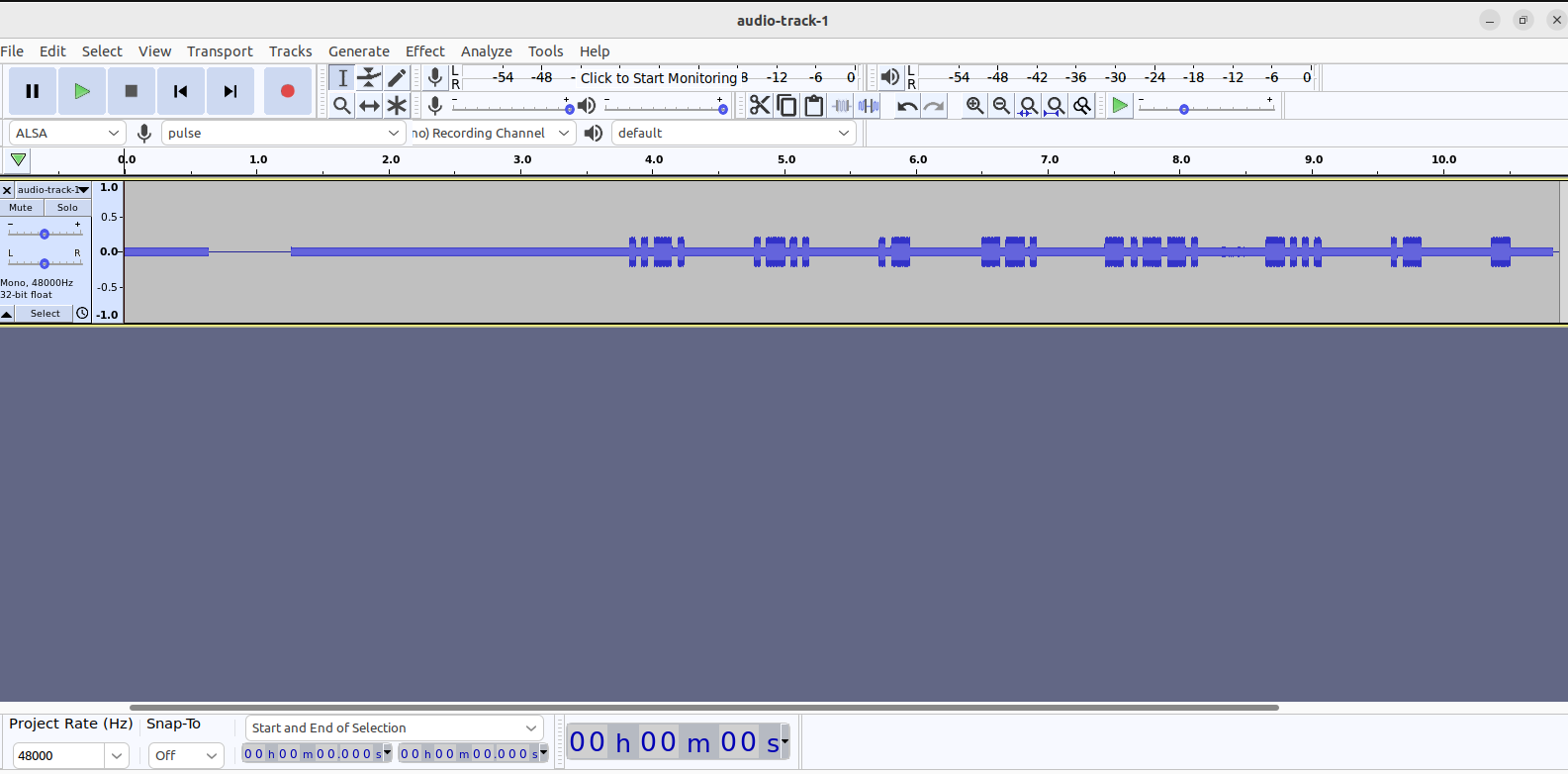

This results in a .WAV file that you can now process with any audio processing program, including Gnu Radio. For example, processing this file in Audacity (FOSS audio processing program), we see the entire signal in the time domain:

We can pipe this audio to the CW decoder of your choice. I prefer using `multimon-ng` in the terminal:

multimon-ng -a SCOPE -a MORSE_CW

This will decode even the sped-up CW tones, although Audacity can slow down the playback through the "Effect --> Change Speed" option.

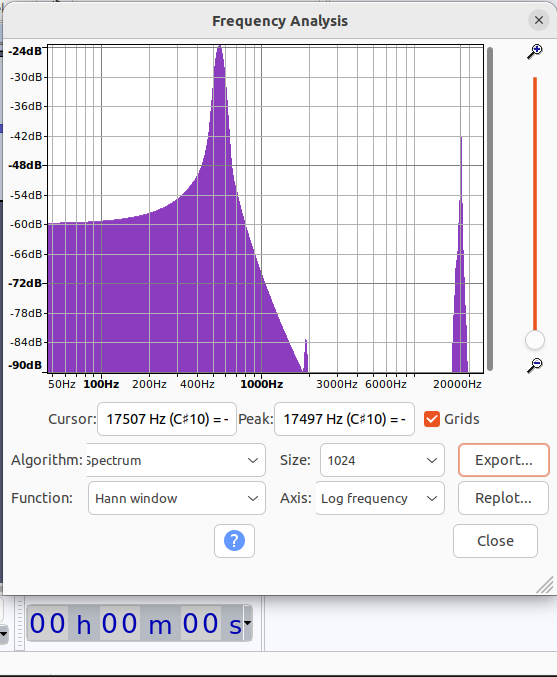

It may not be clear from the time-based view, but there's an additional subcarrier in the audio track 1. You can see it as what would appear to be low-amplitude noise around the center point of the signal. However, if we select this signal with CTRL+A and then choose "Analyze--> Plot Spectrum" (or, view the FFT in GNURadio using a WAV source), we see the extra subcarrier very clearly around 17.5kHz:
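The same spectrum check can be sketched outside of Audacity. The snippet below is a minimal illustration, assuming the samples have already been loaded into a NumPy array; it uses a synthetic stand-in (a loud 1 kHz tone plus a quiet 17.5 kHz tone) rather than the real `track1audio.wav`, and the helper name `strongest_tone_above` is hypothetical.

```python
import numpy as np

def strongest_tone_above(samples, sample_rate, min_freq_hz):
    """Return the frequency (Hz) of the strongest spectral bin at or above min_freq_hz."""
    windowed = samples * np.hanning(len(samples))
    spectrum = np.abs(np.fft.rfft(windowed))
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate)
    mask = freqs >= min_freq_hz
    return freqs[mask][np.argmax(spectrum[mask])]

# Synthetic stand-in for the extracted track (an assumption, not the real file):
# loud 1 kHz "program audio" plus a quiet tone hidden at 17.5 kHz.
fs = 48_000
t = np.arange(fs) / fs
audio = np.sin(2 * np.pi * 1_000 * t) + 0.01 * np.sin(2 * np.pi * 17_500 * t)

# Only look above roughly where hearing gives out, per the hint.
peak_hz = strongest_tone_above(audio, fs, 15_000)
print(f"Strongest component above 15 kHz: {peak_hz:.0f} Hz")
```

Restricting the search to the band above 15 kHz is what makes the weak subcarrier stand out; searching the whole spectrum would just return the program audio.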

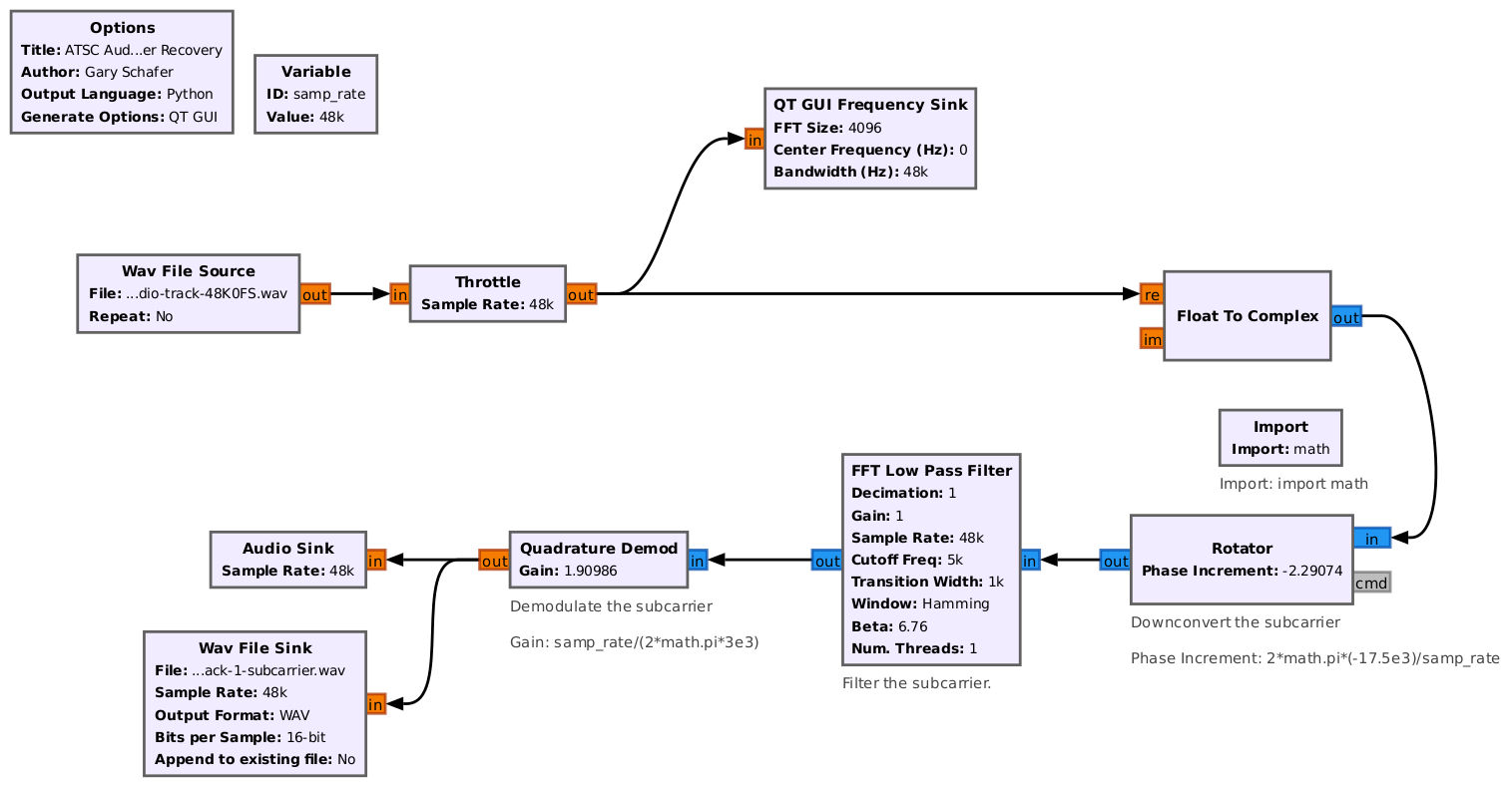

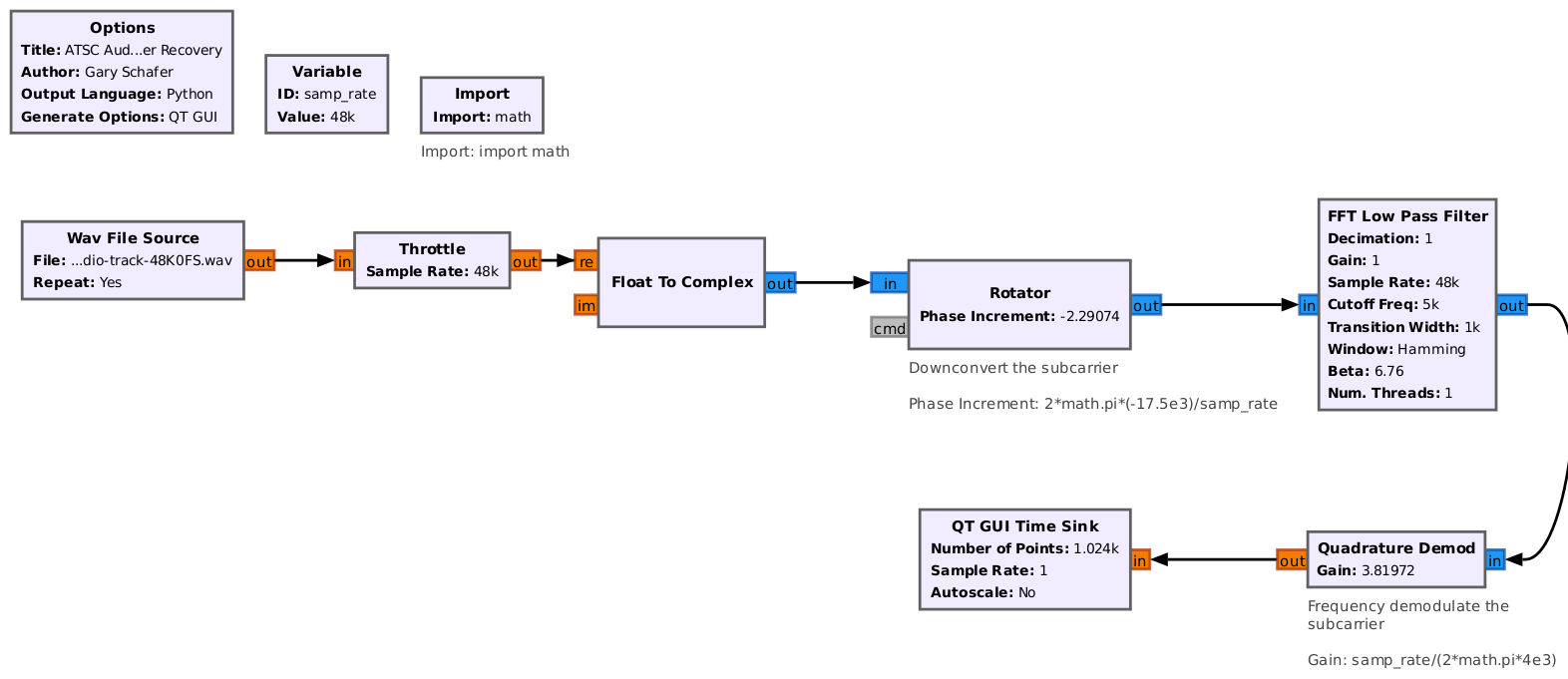

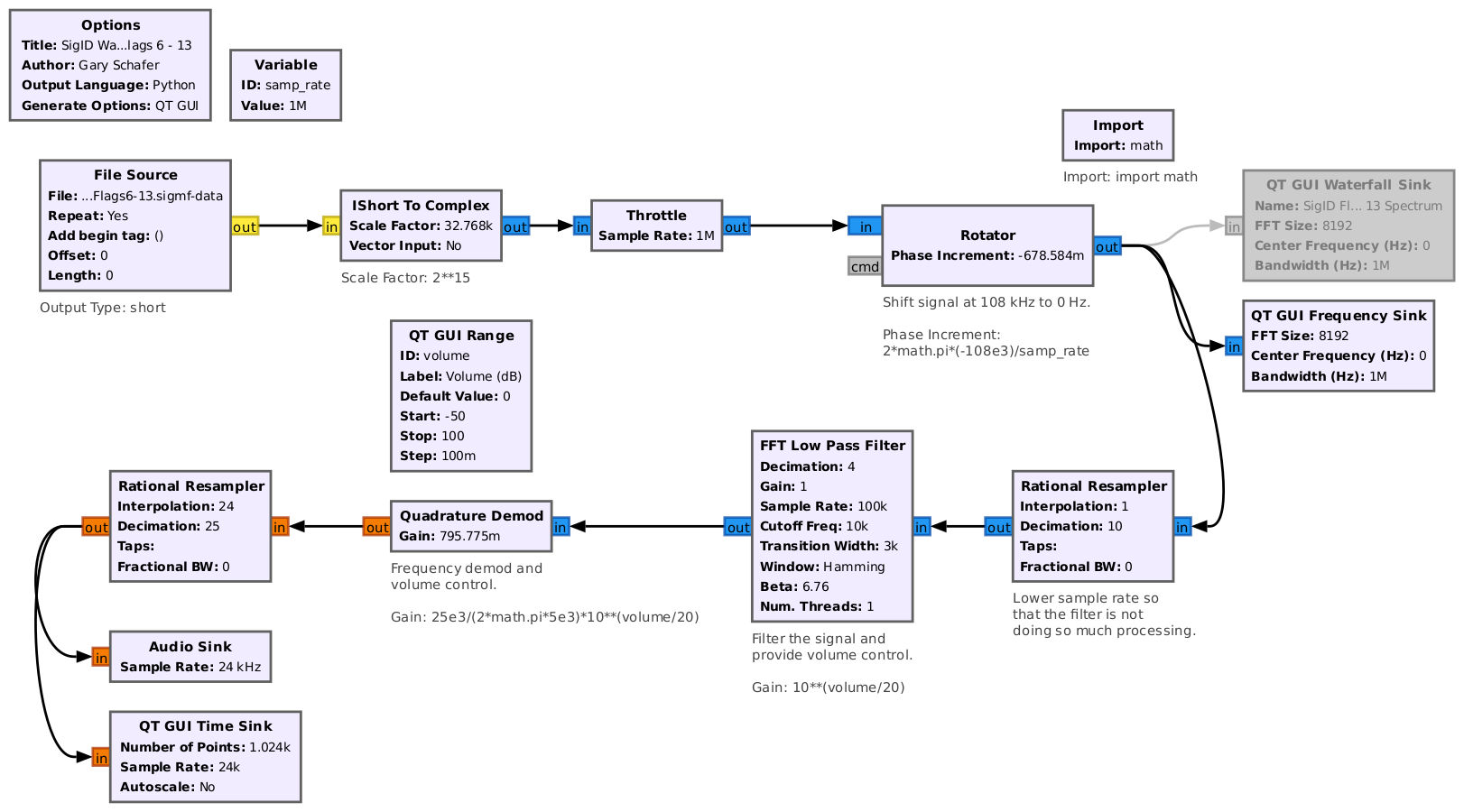

We can use GNURadio to isolate and demodulate that subcarrier, using our recorded .WAV file as an input. This flowgraph shifts the 17.5kHz signal to baseband, filters it, and demodulates it as NBFM (using quadrature demod). We probably didn't know at first that it was NBFM, but looking at the shifted signal (using a frequency sink or waterfall sink), it would quickly be evident this is the case.
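For readers who want to see the shift, filter, and demodulate chain without opening GNU Radio Companion, here is a rough NumPy sketch of the same idea. It is not the flowgraph from the challenge: the filter length, the 6 kHz bandwidth, and the test tone are all assumptions chosen for illustration, and the function names are hypothetical.

```python
import numpy as np

def lowpass_taps(cutoff_hz, sample_rate, num_taps=129):
    """Windowed-sinc low-pass FIR taps with unity DC gain."""
    n = np.arange(num_taps) - (num_taps - 1) / 2
    taps = np.sinc(2 * cutoff_hz / sample_rate * n) * np.hamming(num_taps)
    return taps / taps.sum()

def demod_subcarrier(audio, sample_rate, center_hz, bandwidth_hz):
    """Shift a subcarrier to baseband, low-pass it, and FM-demodulate it."""
    n = np.arange(len(audio))
    # Multiply by a complex exponential to move center_hz down to 0 Hz.
    baseband = audio * np.exp(-2j * np.pi * center_hz * n / sample_rate)
    taps = lowpass_taps(bandwidth_hz / 2, sample_rate)
    filtered = np.convolve(baseband, taps, mode="same")
    # Quadrature demod: phase difference between consecutive samples.
    return np.angle(filtered[1:] * np.conj(filtered[:-1]))

# Synthetic check (assumed values): a 300 Hz tone FM-modulated with 2 kHz
# deviation onto a 17.5 kHz subcarrier inside 48 kHz real audio.
fs = 48_000
t = np.arange(fs) / fs
phase = 2 * np.pi * 17_500 * t + (2_000 / 300) * np.sin(2 * np.pi * 300 * t)
recovered = demod_subcarrier(np.cos(phase), fs, center_hz=17_500, bandwidth_hz=6_000)

spectrum = np.abs(np.fft.rfft(recovered - recovered.mean()))
recovered_tone_hz = np.fft.rfftfreq(len(recovered), 1 / fs)[np.argmax(spectrum)]
print(f"Recovered tone: {recovered_tone_hz:.0f} Hz")
```

The output is in radians per sample; scaling it by `fs / (2 * pi * deviation)` would normalize it, but for listening the scale only affects volume.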

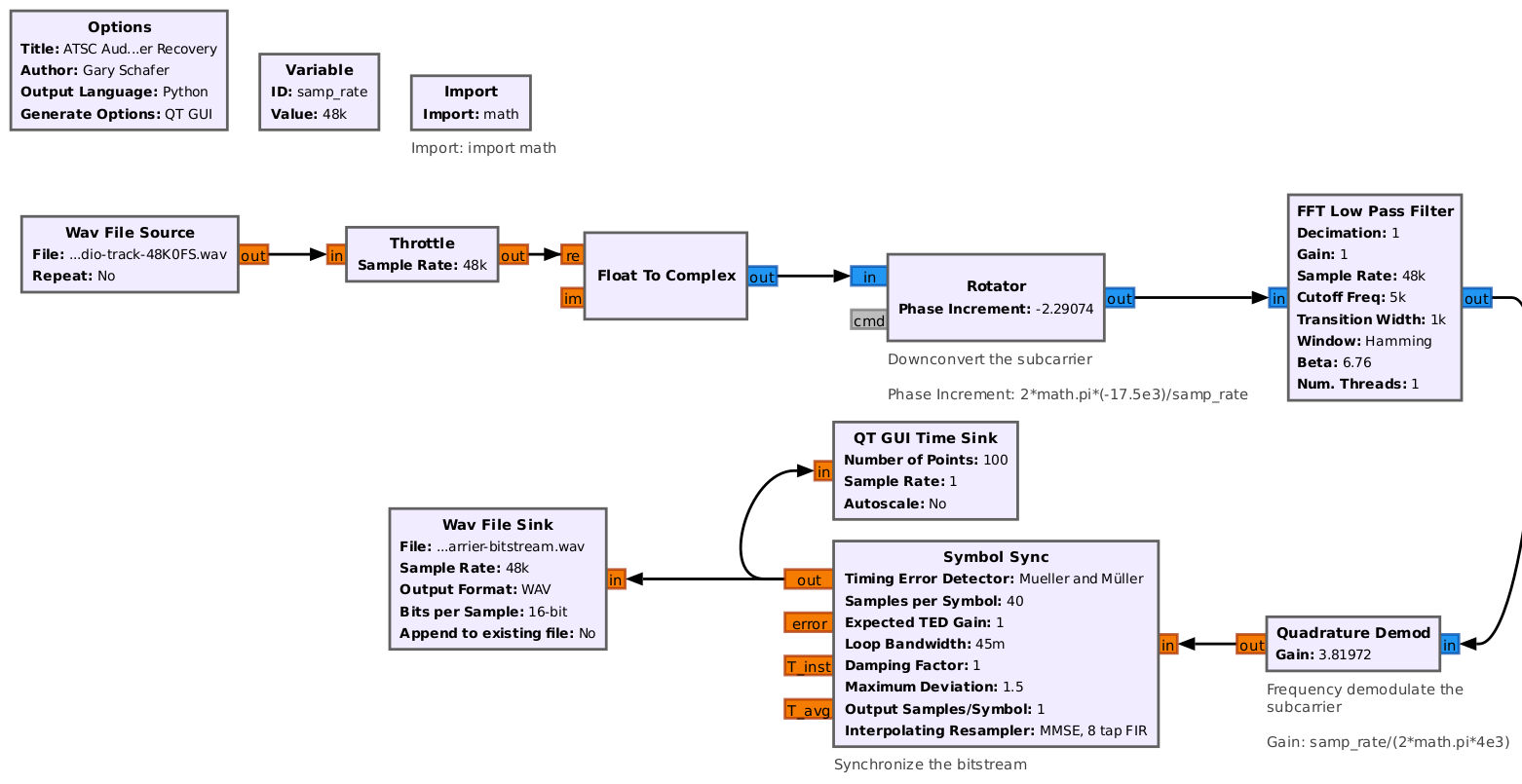

In an additional twist, listening to the output, it is unintelligible. That's because it is time-reversed. Therefore, we save the baseband audio out as a WAV file, then reverse it in Audacity ("Effect --> Reverse" menu). Finally, we hear the flag (and a congratulations message!) dictated.

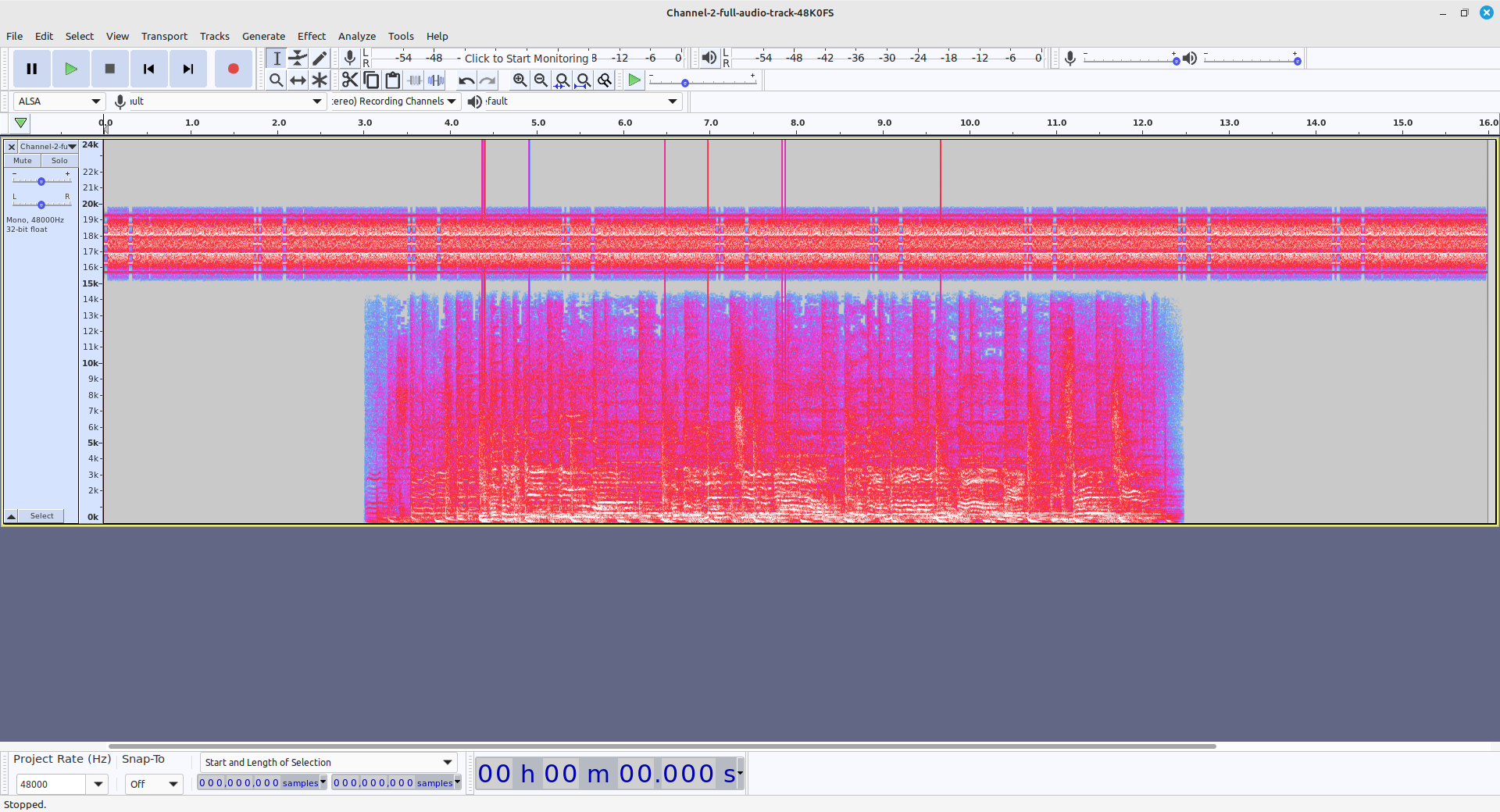

Extracting the second audio track, using the same ffmpeg command as above, but with "-map 0:3", you get a .WAV file that, when opened in a similar fashion, shows this spectrogram. Note, you can also record the audio directly out to Audacity by directing Audacity's input to the output of VLC when audio track 2 is playing.

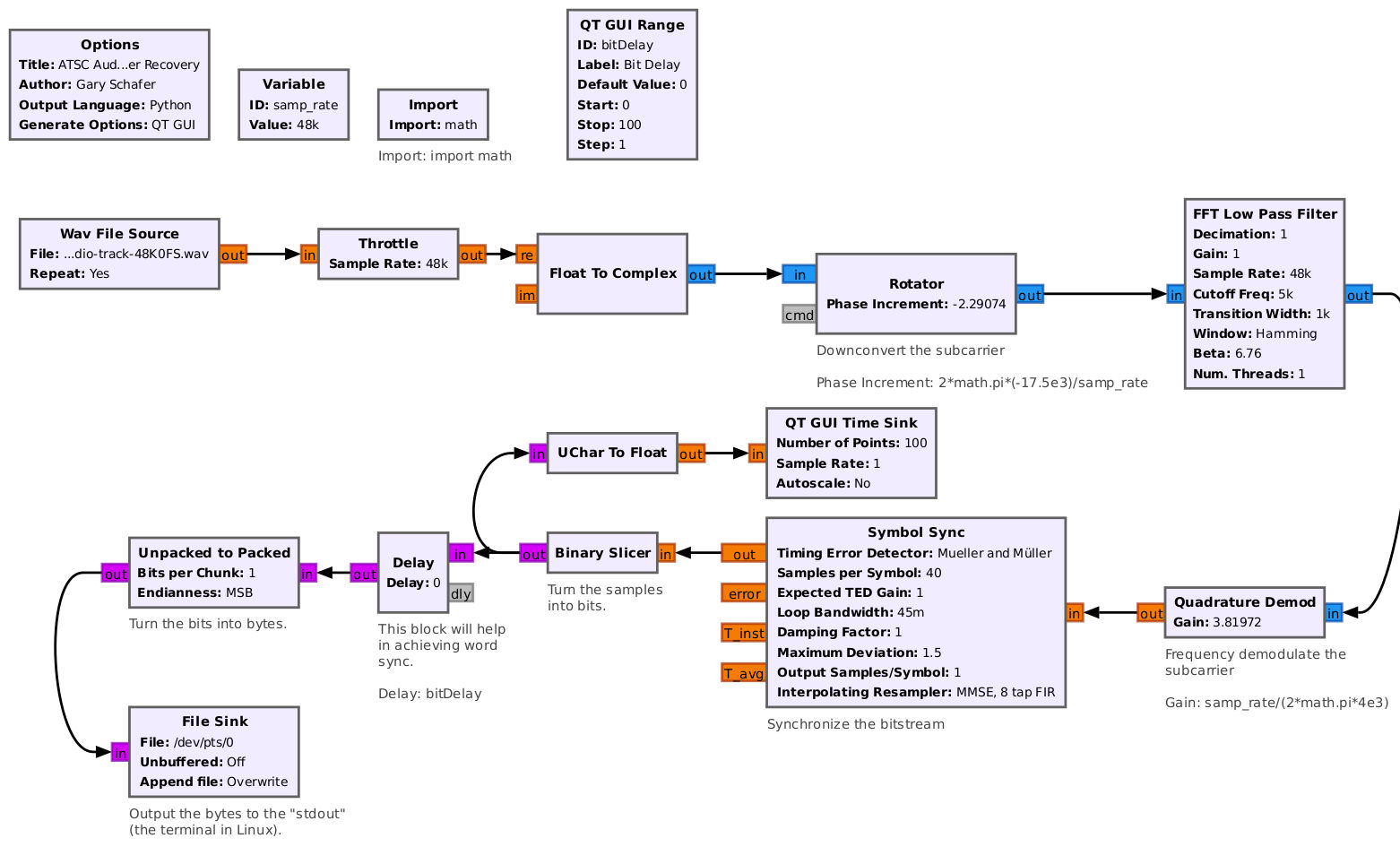

It's not clear immediately what's going on, but we can use GNURadio to shift the bottom signal to baseband and then investigate it. By viewing the FFT output of the shifted signal, you should see this is a 2FSK signal.

This flowgraph downconverts, demodulates and displays the signal on the QT GUI Time Sink (assuming an FM modulation of some kind). To determine the number of samples per symbol, the "Sample Rate" of the time sink has been set to "1". With this setting, any measurements will be "samples".

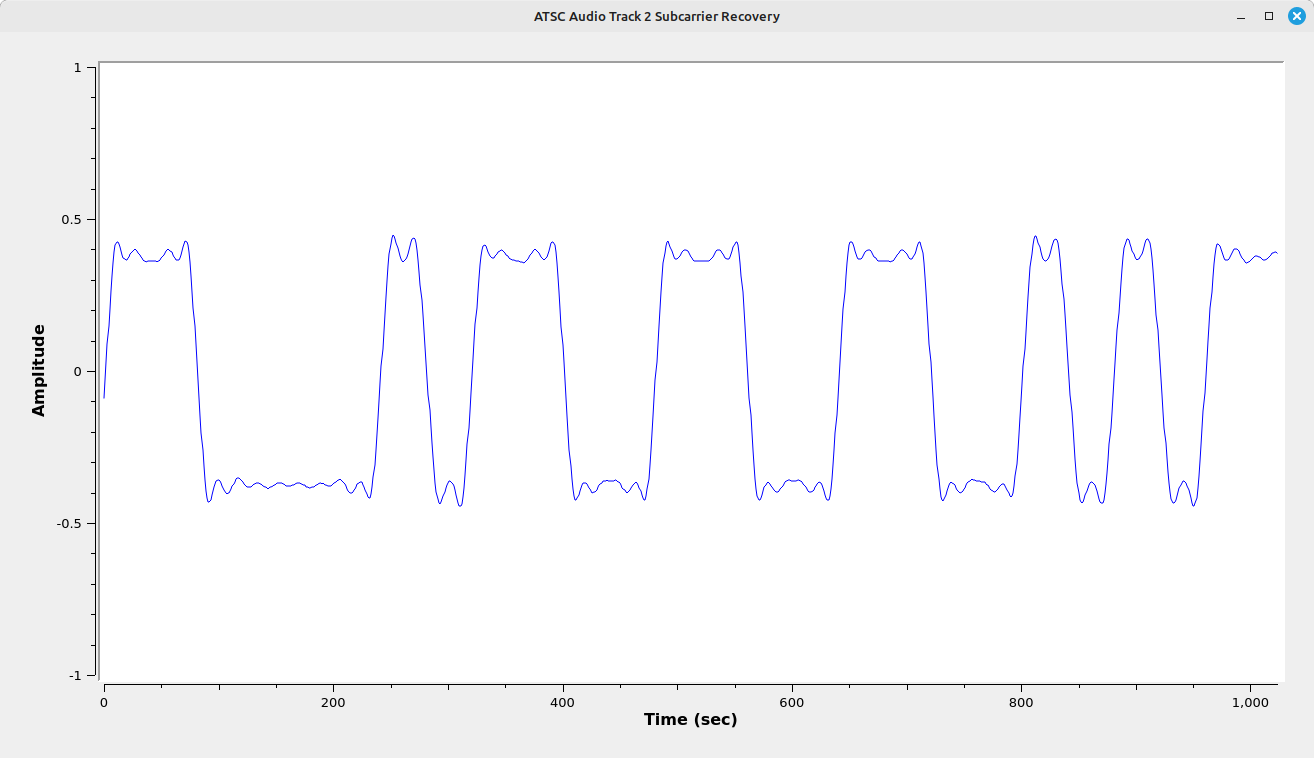

The output of this flowgraph is a time domain signal that appears to be a bitstream.

The sample rate of this QT GUI Time Sink has been set to 1, so the horizontal axis is not "sec", but "samples". This makes it relatively straightforward (if not easy) to calculate the number of samples per symbol. This can be done by placing the cursor on either side of the narrowest bits at the halfway point vertically. In this case, the value is roughly 40 samples per symbol.

After recovering the raw data stream, it's possible to use the Gnu Radio "Symbol Sync" to recover the bits. Using the value of the samples per symbol calculated from the time domain display above (40 samples per symbol), the "Symbol Sync" block will output raw bits.
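The cursor measurement can also be approximated numerically. The sketch below assumes a clean, noise-free demodulated waveform centered on zero; it slices the waveform and takes the shortest interior run of identical values as one symbol. The function names and the test pattern are made up for illustration, and on a noisy capture the minimum would need to be replaced with something more robust (a histogram of run lengths, for example).

```python
import numpy as np

def run_lengths(bits):
    """Lengths of consecutive runs of identical values in a 0/1 array."""
    bits = np.asarray(bits)
    change_points = np.flatnonzero(np.diff(bits)) + 1
    edges = np.concatenate(([0], change_points, [len(bits)]))
    return np.diff(edges)

def estimate_samples_per_symbol(demodulated):
    """Slice at zero, then treat the shortest interior run as one symbol."""
    sliced = (np.asarray(demodulated) > 0).astype(int)
    runs = run_lengths(sliced)
    # The first and last runs may be cut off by the capture boundaries.
    interior = runs[1:-1] if len(runs) > 2 else runs
    return int(np.min(interior))

# Assumed test pattern: ten symbols, each held for 40 samples.
symbols = np.array([1, 0, 0, 1, 1, 1, 0, 1, 0, 0])
waveform = np.repeat(2.0 * symbols - 1.0, 40)
sps_estimate = estimate_samples_per_symbol(waveform)
print(sps_estimate)
```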

FSK is, by its very nature, a differential transmission method. Hence, a differential decoder is not needed (as would typically be the case with a PSK signal). The only issue is how to determine what the bits mean. ASCII is a very common method (hence the hint mentioning "ASKEY"). The "Symbol Sync" provides bit sync, but there's still the issue of aligning the bytes to recover the ASCII properly. That's the purpose of the "Delay" block. To run the flowgraph, make sure the "stdout" (typically a terminal) is open. Once the flowgraph is running, increase the delay until text appears in the terminal. NOTE: Due to the relatively short amount of time of the recording before it starts to repeat, the byte alignment will probably need adjustment between repeats.
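Stepping the delay by hand works, but the search is small enough to automate: there are only eight possible byte alignments. The following is a minimal sketch, assuming the recovered bits are available as an array of 0s and 1s and that bytes are packed most-significant-bit first (both assumptions, as is the helper name). It scores each alignment by the fraction of printable ASCII bytes.

```python
import numpy as np

def best_ascii_alignment(bits):
    """Try all 8 bit offsets; return (offset, text) with the most printable ASCII."""
    bits = np.asarray(bits, dtype=np.uint8)
    best_offset, best_text, best_score = 0, "", -1.0
    for offset in range(8):
        usable = bits[offset:]
        usable = usable[: len(usable) // 8 * 8]   # drop any partial trailing byte
        data = np.packbits(usable).tobytes()      # MSB-first packing (assumption)
        if not data:
            continue
        score = sum(32 <= b < 127 for b in data) / len(data)
        if score > best_score:
            best_offset = offset
            best_text = data.decode("ascii", errors="replace")
            best_score = score
    return best_offset, best_text

# Illustrative input: a known string with three stray bits in front of it.
message_bits = np.unpackbits(np.frombuffer(b"GNU Radio", dtype=np.uint8))
stream = np.concatenate(([1, 0, 1], message_bits))
offset, text = best_ascii_alignment(stream)
print(offset, text)
```

Because the recording repeats and the alignment can change between repeats, a real capture would likely need this run on short windows rather than on the whole stream at once.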

Another option is saving the subcarrier as a complex file, then importing it into Universal Radio Hacker (URH). URH provides some basic tools that allow for easier "bit-busting", especially with digital too

Opening the resulting file in Universal Radio Hacker (URH), I got the following initial view. I'd adjusted the samples/symbol to "1" (the "Symbol Sync" block took care of that) and the "Error Tolerance" to "0". From the "Analysis" page, you're presented with a bitstream. Changing the "View Data As" from "Bits" to "ASCII", you'll probably get a string of random characters along the top. Right-clicking on the bitstream, you get the option to make the stream "Writeable". Doing so allows you to go back to viewing as "Bits", delete the first bit, switch back to "ASCII" and see if any text appears. Rinse and repeat if you get weird, random characters. At some point, you should see text appear.

Catch ‘Em All

Coming soon

This was a

Hop to It

Coming soon

In-Person

Coming soon

Never the Same Color (revisited)

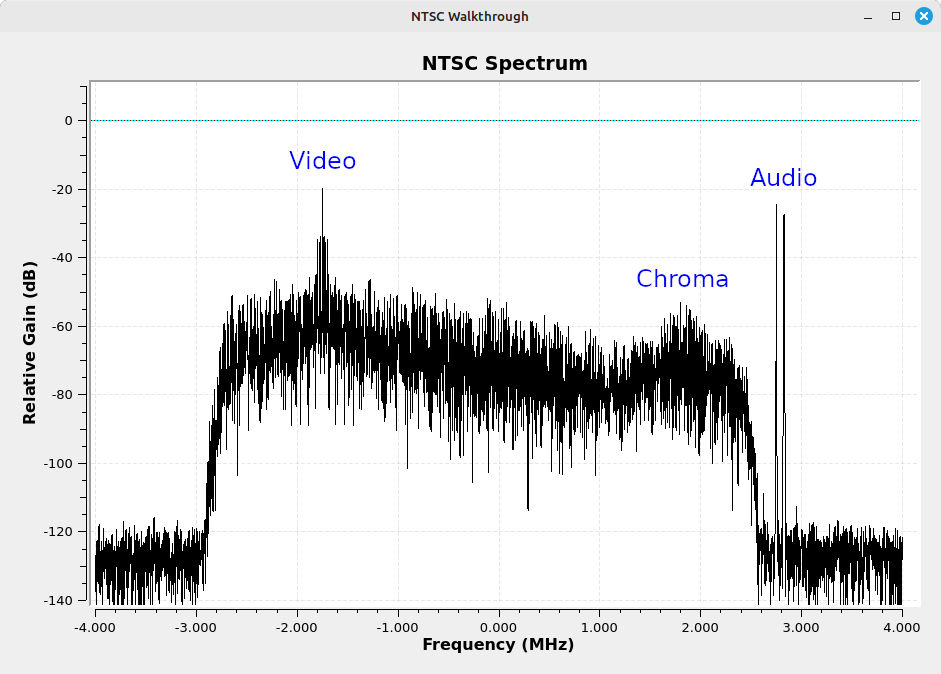

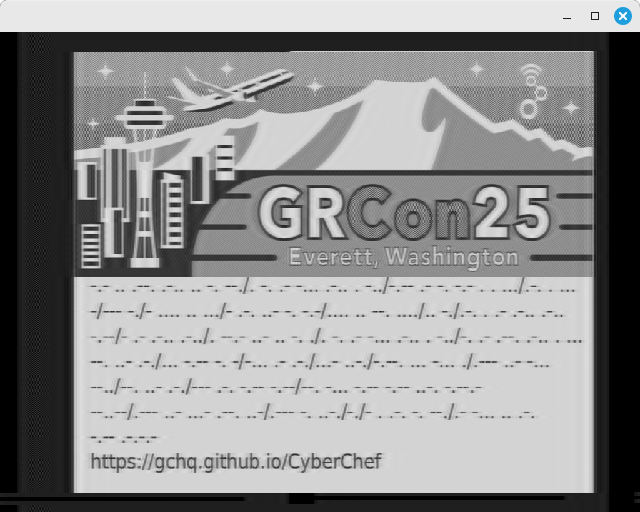

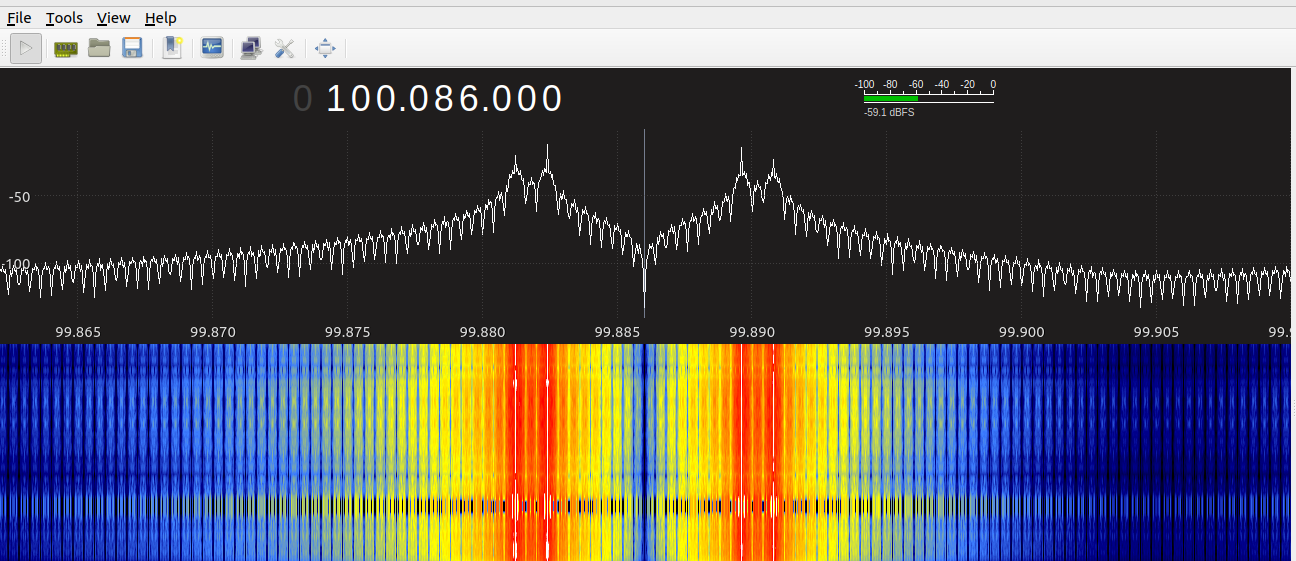

Never the Same Color is a play on the abbreviation for National Television System Committee (NTSC), the original US analog television standard created in the 1940s. From a signal standpoint, the NTSC standard defines both the baseband (information) signal and the modulated signal. Unlike ATSC, where all the information is contained within a single bitstream, the NTSC standard uses multiple carriers for video, audio and chroma (color) information. Viewing the spectrum of the IQ file, you should see the following:

Audio

We'll start with the easier signal, the audio. NTSC audio is analog frequency modulation (FM) on a main carrier that is 4.5 MHz higher in frequency than the video carrier. NTSC allows for multiple audio carriers, with the main for the normal audio, and additional ones for secondary audio programming (SAP) and other uses. The spectrum shows what appears to be two audio carriers.

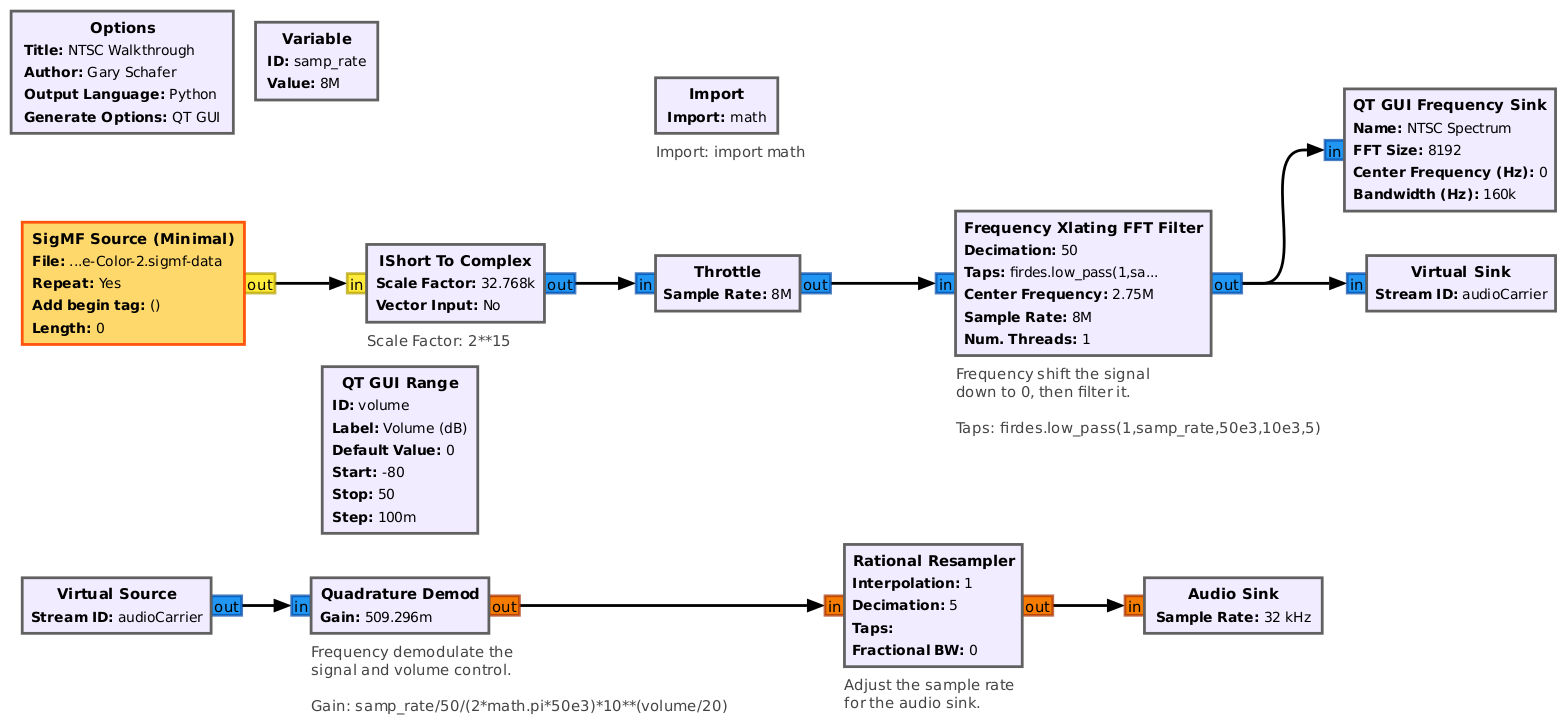

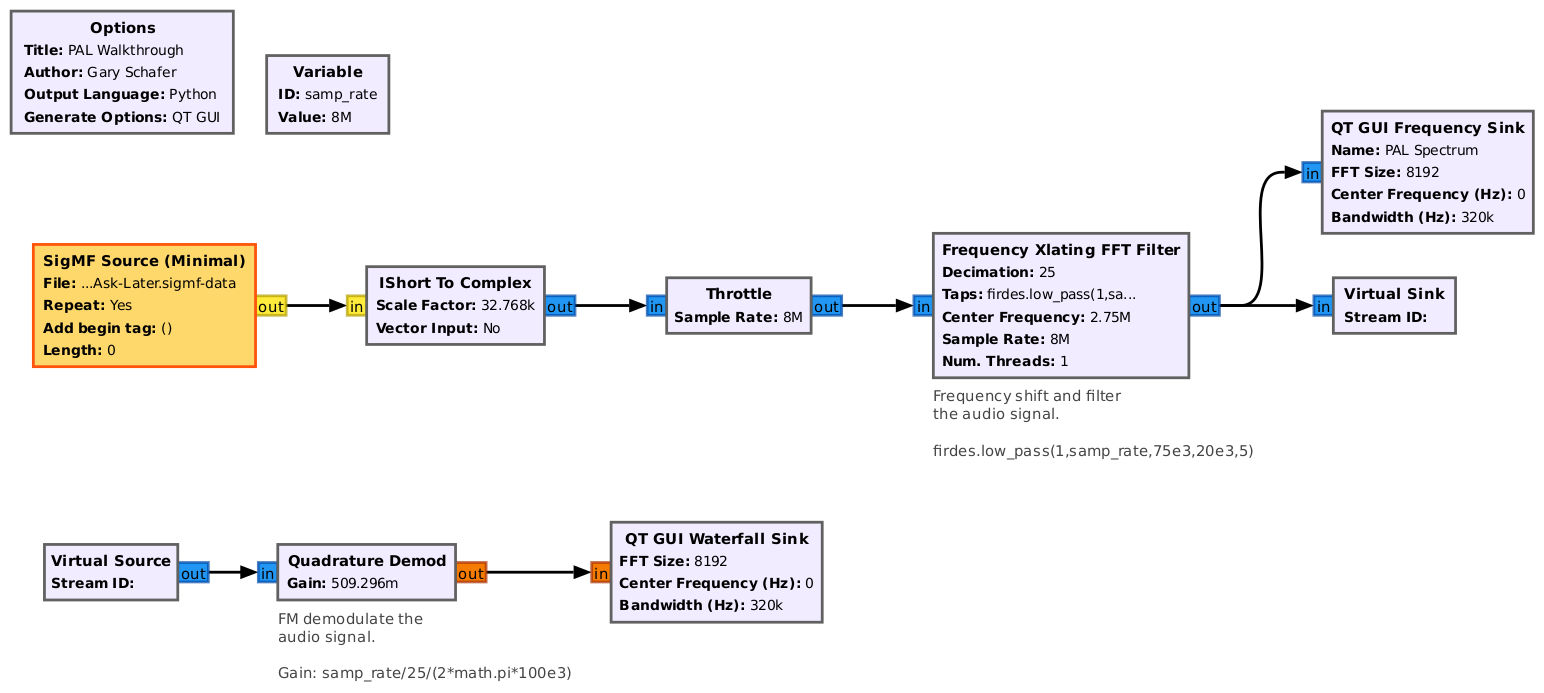

Let's put together a flowgraph that will tune to, filter and demodulate these audio carriers. Since we're not centered on the video carrier, we need to determine how much of a frequency shift to use to center the two audio signals. We can use the spectrum and a mouse cursor to determine this. The first audio carrier is 2.75 MHz from the center. We can use the following flowgraph:

The flowgraph above will recover the audio from the main carrier, which is flag 2. Shifting the center frequency in the "Frequency Xlating FFT Filter" to 2.829 MHz, the center frequency of the other audio carrier and the carrier used for Secondary Audio Programming (SAP), gives you a clue for solving flag 1, which is on the video signal.

Video

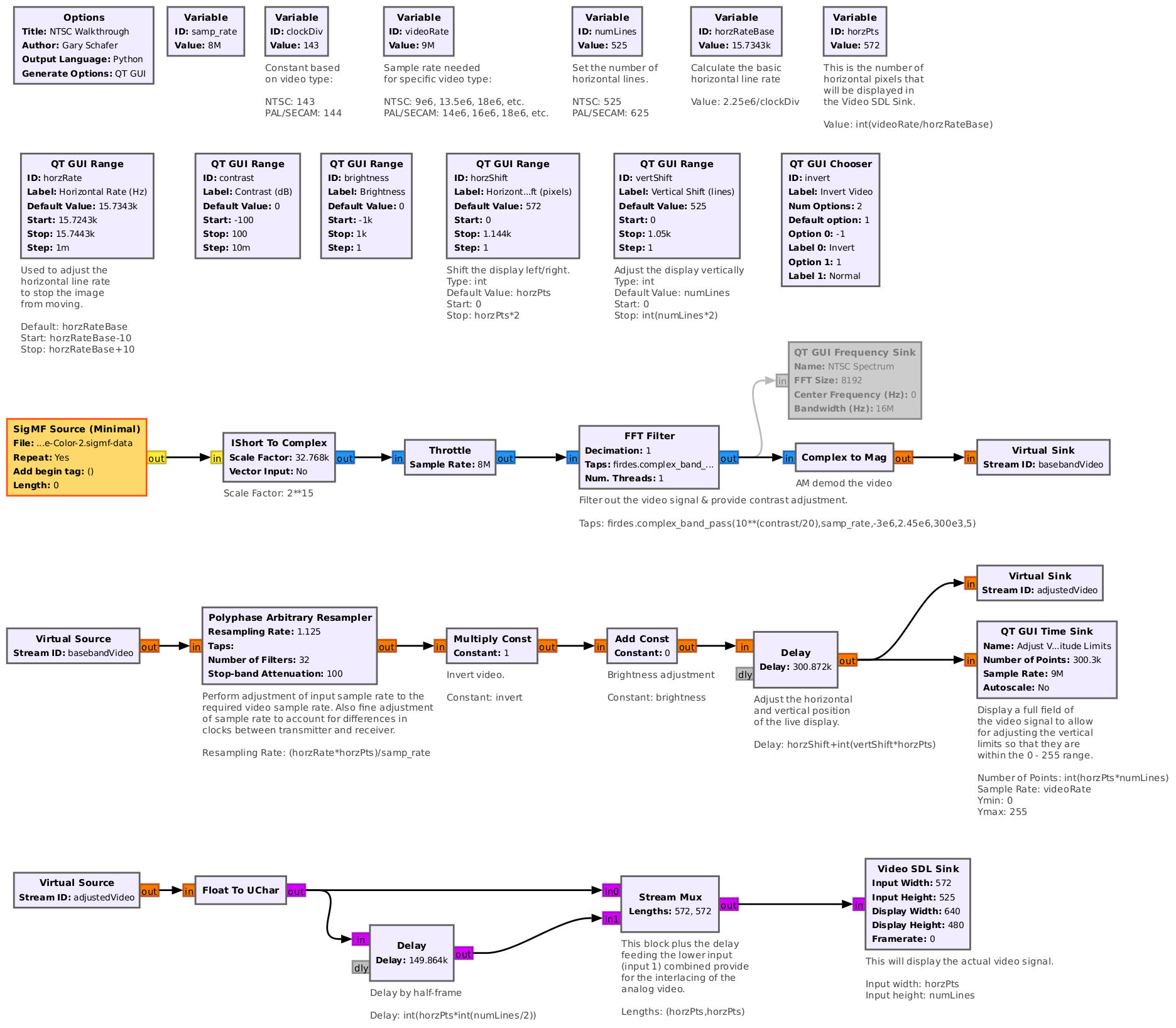

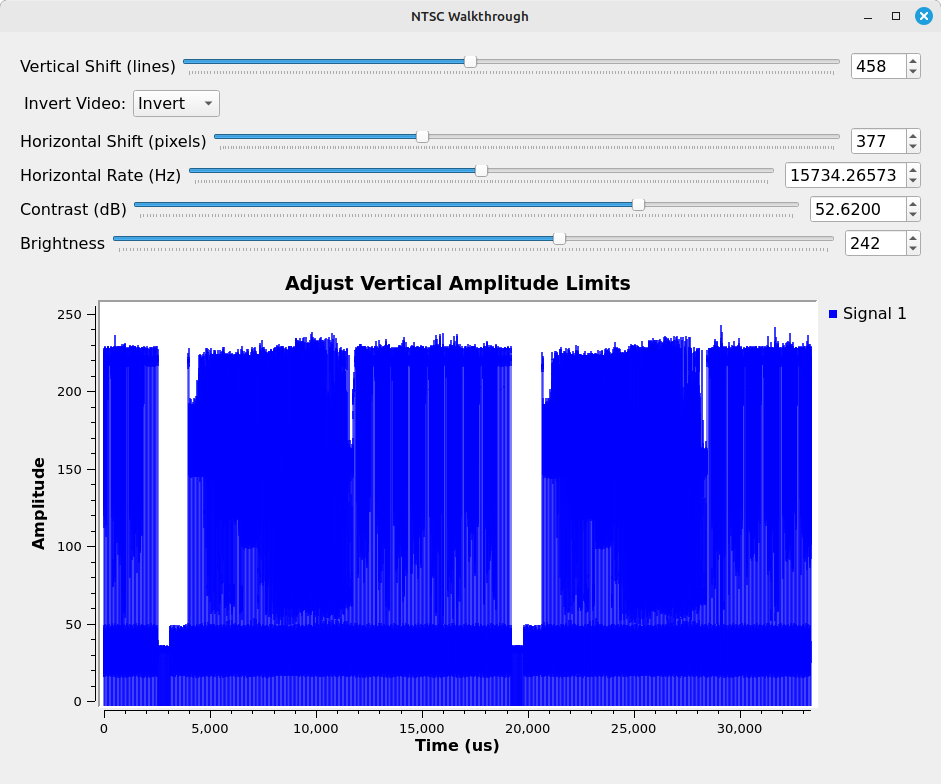

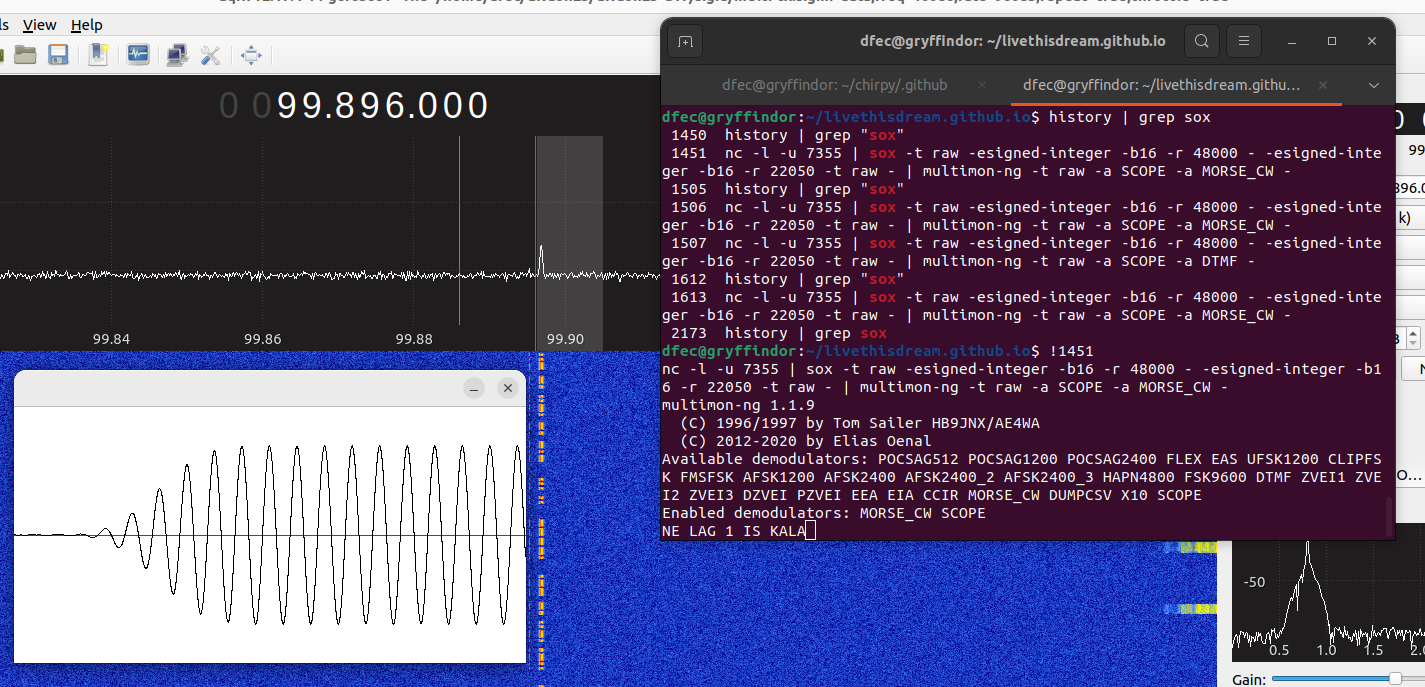

Now for the fun part, going after the video signal. NTSC video is modulated onto the carrier using a form of amplitude modulation (AM) known as "vestigial sideband" or "VSB" modulation. In this modulation, the upper sideband is left intact, and the lower sideband is filtered to remove most of the higher frequencies, leaving only a portion or "vestige" (hence the name) of the lower sideband. Regardless, it's full carrier, meaning it can be demodulated with noncoherent methods. The video carrier is centered at -1.75 MHz. But due to the AM full carrier modulation, we can simply filter the video signal, then run it through a "Complex to Mag" block to extract the baseband video. From there, we need to ensure the clock rate is set for the appropriate video format. Here's the full flowgraph for analog video. NOTE: This flowgraph will work with NTSC, PAL, or SECAM. You only need to adjust certain parameters within the flowgraph before running it.

Running this flowgraph, adjusting various parameters as described above, we get:

Properly adjusting the parameters leads to:

From here, the previous clue provided in the SAP (Secondary Audio Programming) signal and the hyperlink provided at the bottom of the video display need to be used. The clue says that the display is encrypted with Vigenère. Opening up the URL provided (https://gchq.github.io/Cyberchef), we can use the clue from the audio streams to decode the encrypted text.
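If you would rather stay in the terminal than use the web tool, a Vigenère decode is only a few lines. This is a generic sketch, not the challenge solution: the ciphertext and key below are the well-known textbook example, not the ones from the video, and the convention that non-letters pass through without consuming a key letter is an assumption.

```python
def vigenere_decrypt(ciphertext, key):
    """Decrypt a Vigenere cipher.

    Non-letters pass through unchanged and do not consume key letters
    (an assumed convention).
    """
    shifts = [ord(k) - ord("A") for k in key.upper() if k.isascii() and k.isalpha()]
    out, i = [], 0
    for ch in ciphertext:
        if ch.isascii() and ch.isalpha():
            base = ord("A") if ch.isupper() else ord("a")
            out.append(chr((ord(ch) - base - shifts[i % len(shifts)]) % 26 + base))
            i += 1
        else:
            out.append(ch)
    return "".join(out)

# Textbook example (not the CTF ciphertext or key):
print(vigenere_decrypt("LXFOPVEFRNHR", "LEMON"))
```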

Please Ask Later

"Please Ask Later" is a play on the abbreviation for PAL, or Phase Alternating Line, aka "Peace At Last". PAL is a form of analog modulation that was used in most western European countries until digital television replaced it. From a general viewpoint, it has many of the same characteristics as NTSC, but some key things are different. This includes the timing, the overall bandwidth, and spacing between video, audio, and chroma. We're going to reuse the same flowgraphs as with NTSC, but will have to adjust some of the parameters. Just as we did with NTSC, we'll go after the audio first.

Audio

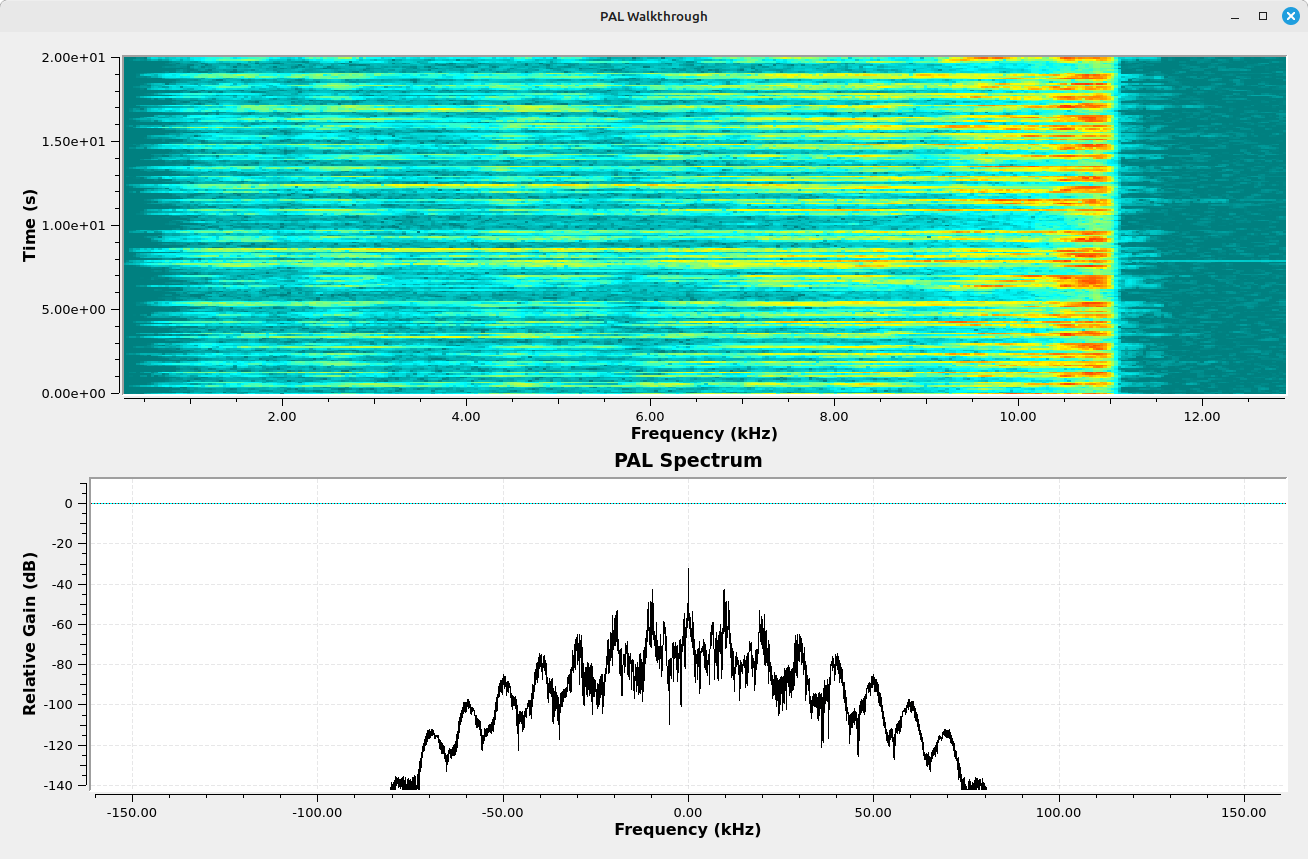

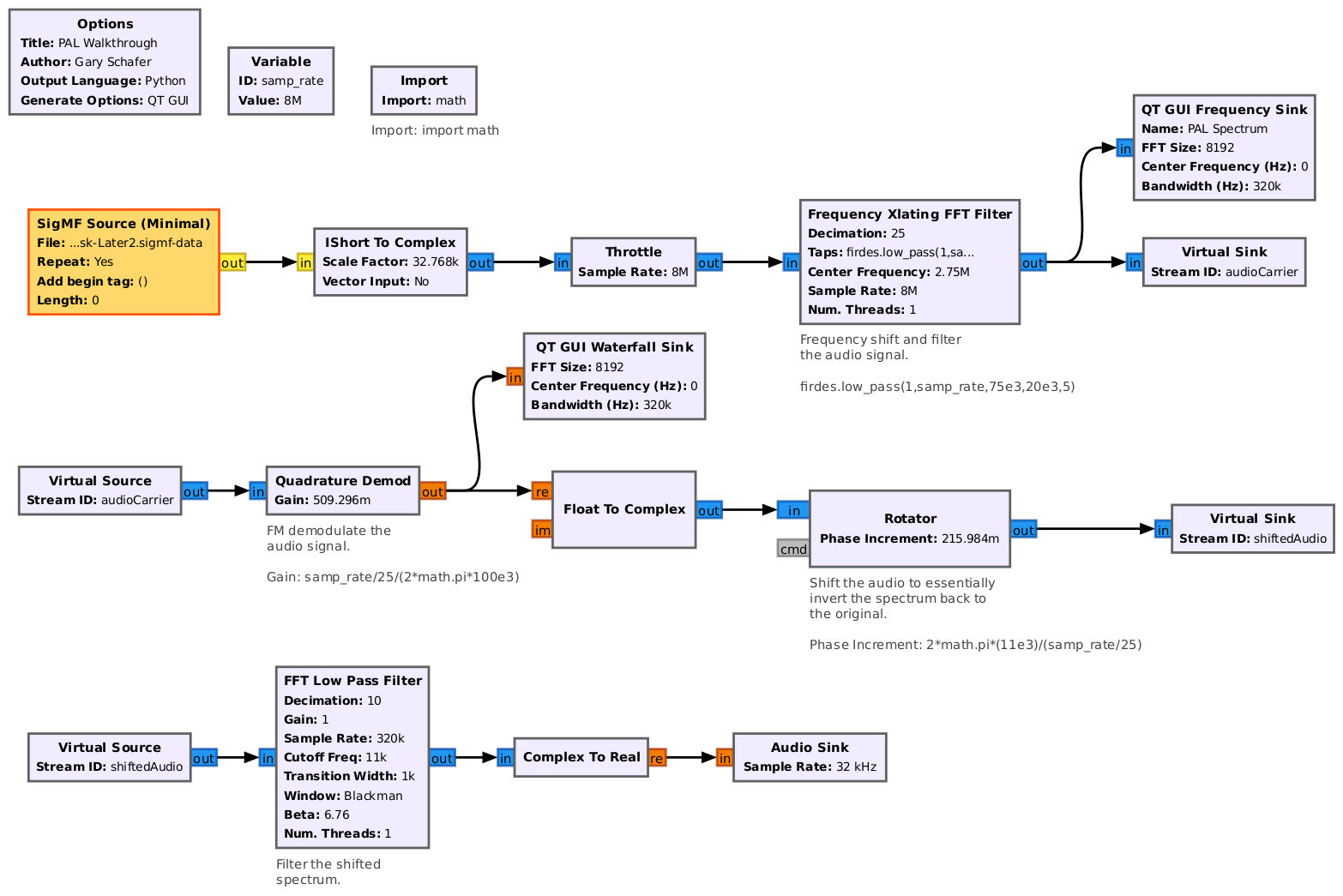

Listening to the audio initially, it sounds wrong. Using a "Waterfall" sink, it shows that the audio energy is concentrated around 11 kHz. It's as if the spectrum has been inverted. We'll adjust the flowgraph to account for this and make the audio sound correct and we will hear the flag.
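One common way to undo an inverted audio spectrum is to mix the signal with a tone at the inversion frequency and low-pass the result, so that each frequency f lands at (inversion frequency - f). The sketch below illustrates that idea in NumPy. The 12 kHz inversion frequency is purely an assumed value for the demonstration; the actual value would have to be read off the waterfall, and this is not necessarily how the flowgraph was adjusted.

```python
import numpy as np

def invert_spectrum(audio, sample_rate, inversion_hz, num_taps=257):
    """Map each frequency f to (inversion_hz - f) by mixing and low-pass filtering."""
    n = np.arange(len(audio))
    # Mixing with a cosine produces sum and difference frequencies.
    mixed = audio * 2.0 * np.cos(2 * np.pi * inversion_hz * n / sample_rate)
    # Windowed-sinc low-pass keeps the difference product, rejects the sum product.
    k = np.arange(num_taps) - (num_taps - 1) / 2
    taps = np.sinc(2 * inversion_hz / sample_rate * k) * np.hamming(num_taps)
    taps /= taps.sum()
    return np.convolve(mixed, taps, mode="same")

# Assumed demo values: an 11 kHz tone, inverted about 12 kHz, should land at 1 kHz.
fs = 48_000
t = np.arange(fs) / fs
inverted = invert_spectrum(np.cos(2 * np.pi * 11_000 * t), fs, inversion_hz=12_000)
peak_hz = np.fft.rfftfreq(fs, 1 / fs)[np.argmax(np.abs(np.fft.rfft(inverted)))]
print(f"Tone now at {peak_hz:.0f} Hz")
```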

Video

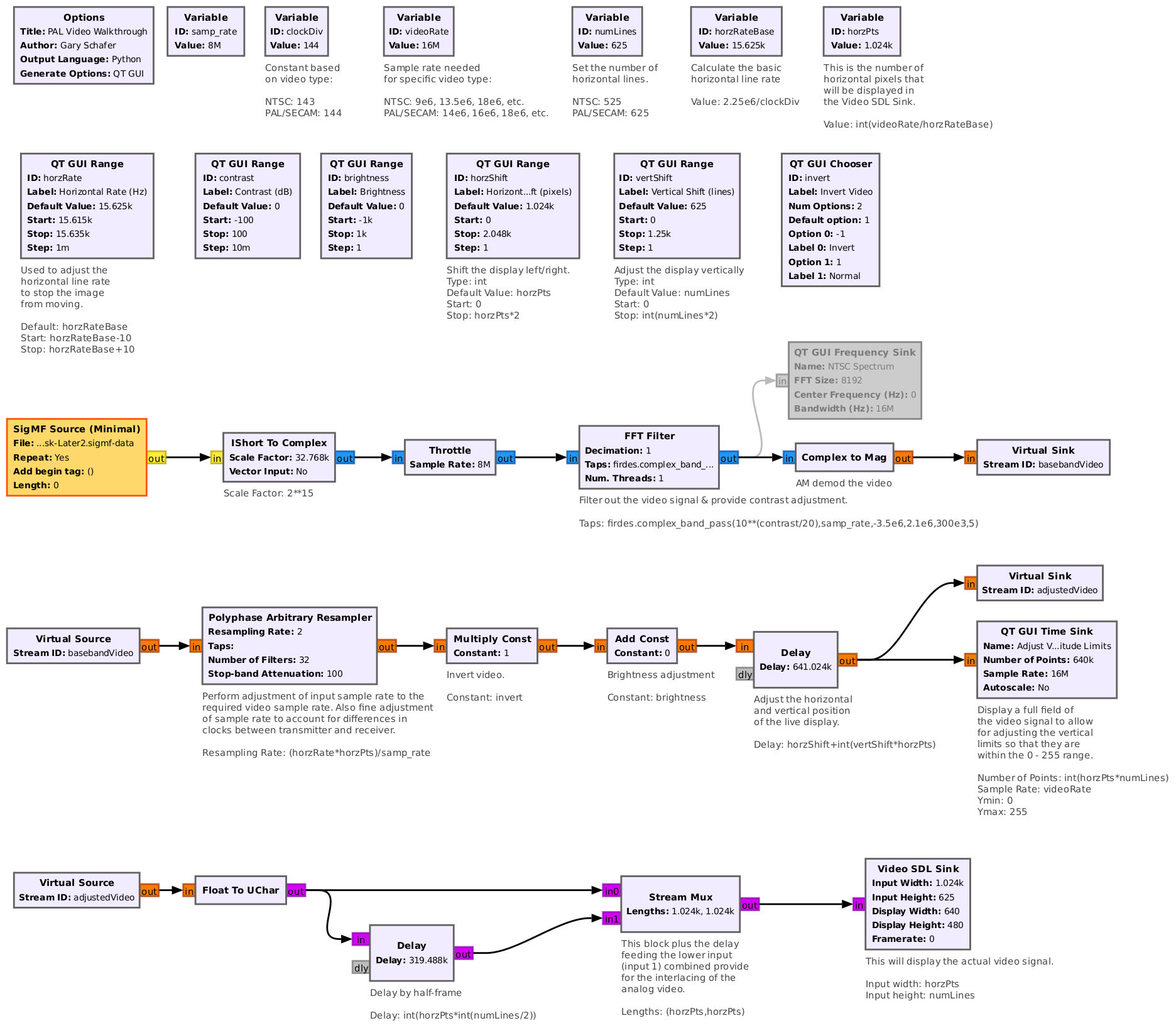

For the video portion, I'm going to use the same flowgraph as with the NTSC video, but I'm going to change the necessary parameters. Those parameters are:

- The variables "clockDiv", "videoRate", and "numLines".
- The input file (obviously) to change from the NTSC IQ file to the PAL IQ file.
- The low and high frequencies of the FFT filter.

The final flowgraph is below:

The display shows what appears to be Morse code. Again, using the URL at the bottom, the "From Morse Code" decode block, and typing in the first three lines of Morse code, we get the following:

Decoding the Morse Code leads to some unintelligible text, but the free hint was “The first displayed letters are KEY.” If you take the first letters of the output box above, they spell out “key rot thirteen”, as in “key ROT13”. Translating the remaining Morse code, you get several words which appear like gibberish. Adding in the ROT13 block, the final text (including the flag) appears.
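The two decoding steps (Morse, then ROT13) can also be chained in a few lines of Python. This is a small sketch covering letters only; the input format (letters separated by spaces, words by " / ") and the sample strings are assumptions for illustration, not the text from the video.

```python
import codecs

CODES = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..",
}
MORSE = {code: letter for letter, code in CODES.items()}

def morse_to_text(morse):
    """Decode Morse with letters separated by spaces and words by ' / '."""
    words = morse.strip().split(" / ")
    return " ".join(
        "".join(MORSE.get(symbol, "?") for symbol in word.split()) for word in words
    )

def rot13(text):
    """Rotate each letter 13 places; applying it twice returns the original."""
    return codecs.decode(text, "rot13")

print(morse_to_text("-.- . -.-- / .-. --- -"))   # letters-only demo input
print(rot13("XRL"))
```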

Signal Identification

This challenge consisted of two parts, both containing common RF signals used in the amateur radio band.

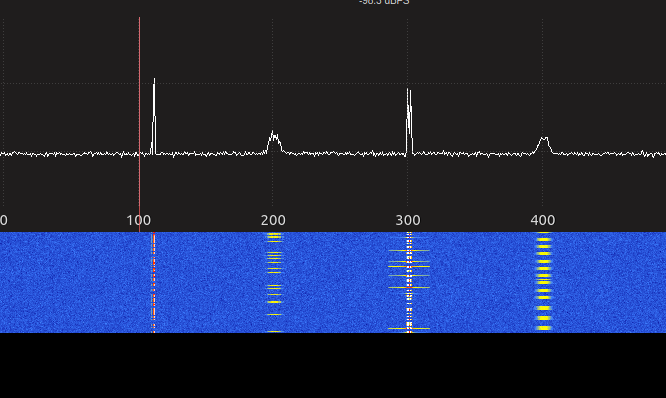

Part 1 (@livethisdream)

This is a 960kHz spectrum consisting of four signals and five flags.

The signals are, from left to right: CW, APRS, DTMF, and M-17 (voice and text).

CW

This is USB modulated Morse code, which we can demodulate using a number of techniques. My favorite comes from the GQRX blog, and uses GQRX to filter and demodulate the signal, then streams the baseband samples to multimon-ng using UDP. We simply ensure the UDP button is active in the baseband signal window on the bottom right of GQRX and set the port to 7355. Then, the one line bash command is:

nc -l -u 7355 | sox -t raw -esigned-integer -b16 -r 48000 - -esigned-integer -b16 -r 22050 -t raw - | multimon-ng -t raw -a SCOPE -a MORSE_CW -

APRS

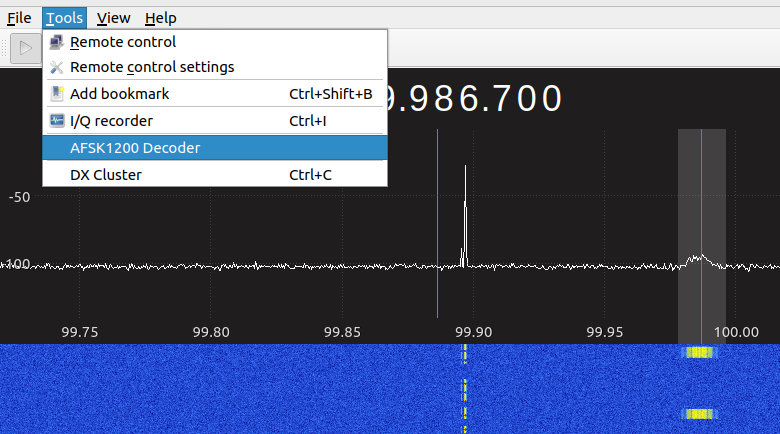

The second signal from the left is APRS, which uses AFSK1200 and can be decoded using a NBFM decoder combined with the built-in AFSK1200 decoder in GQRX:

We may also use direwolf in the terminal by intercepting the UDP packets. The command is:

direwolf -r 48000 udp:7355

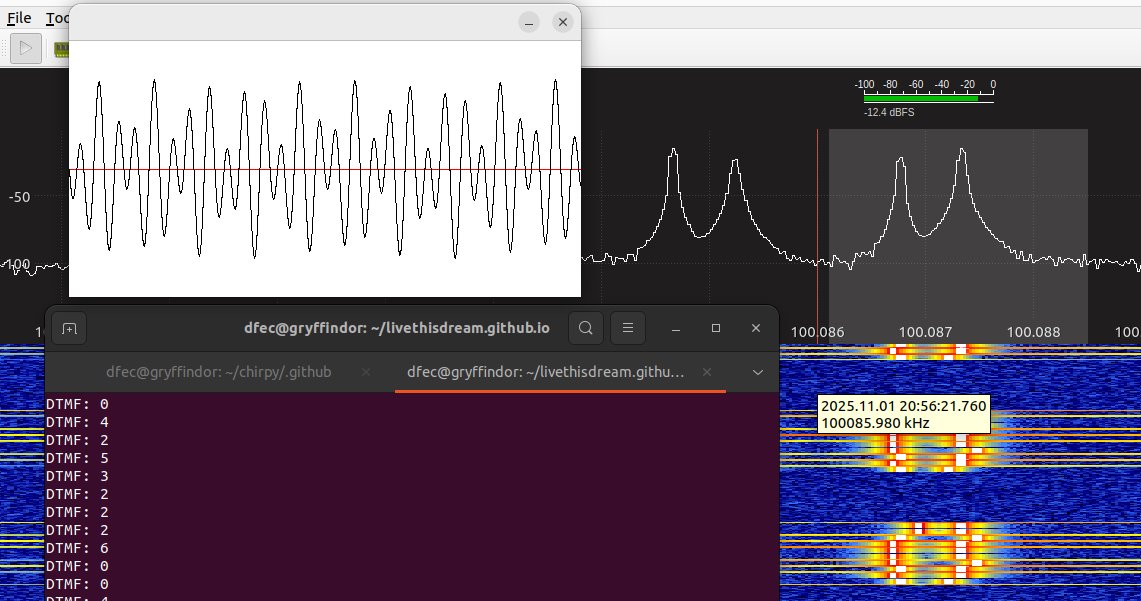

DTMF

The third signal from the left is DTMF (old-school cool). The flag is the numerical key sequence. As with CW and APRS, we can use USB or LSB demodulator to stream the samples to multimon-ng:

nc -l -u 7355 | sox -t raw -esigned-integer -b16 -r 48000 - -esigned-integer -b16 -r 22050 -t raw - | multimon-ng -t raw -a SCOPE -a DTMF -

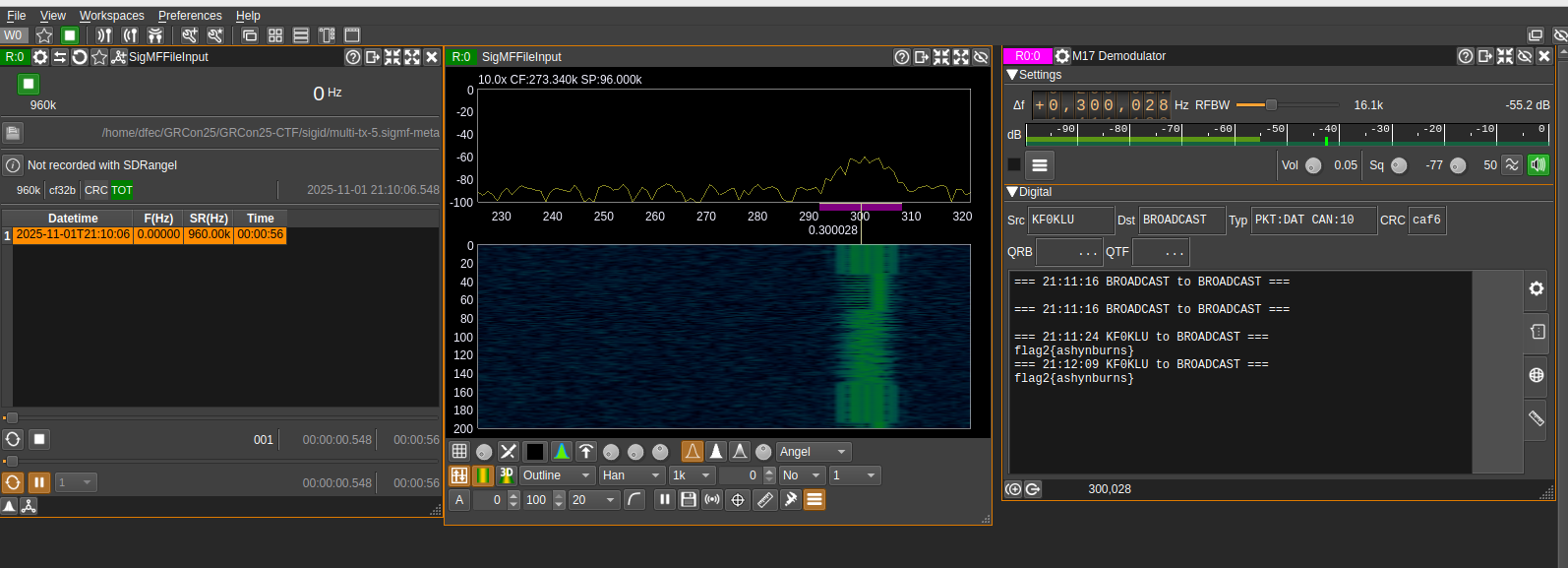

M-17

The signal on the far right is M-17, which can be decoded in GNURadio or SDRAngel. I prefer SDRAngel for its simplicity. The signal contains two flags: one voice and one text (SMS). To find the voice flag, we expand the RF bandwidth to about 16kHz, reduce the squelch threshold, and reduce the volume of the output (I found 0.1 to be a good value). Note, when using Ubuntu, I found SDRAngel’s M-17 plugin often crashes. The best approach to avoid a crash is to set all the values before playing the file back, then start the file playback and move the tuner to capture the signal. It’s suboptimal, but I have experienced the bug for the last several years, only on Ubuntu.

Part 2 (@jesternofool)

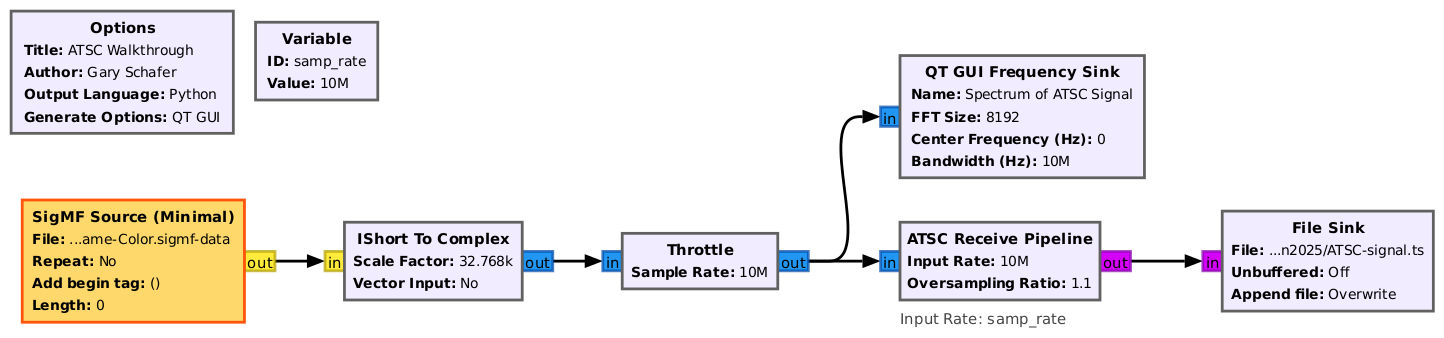

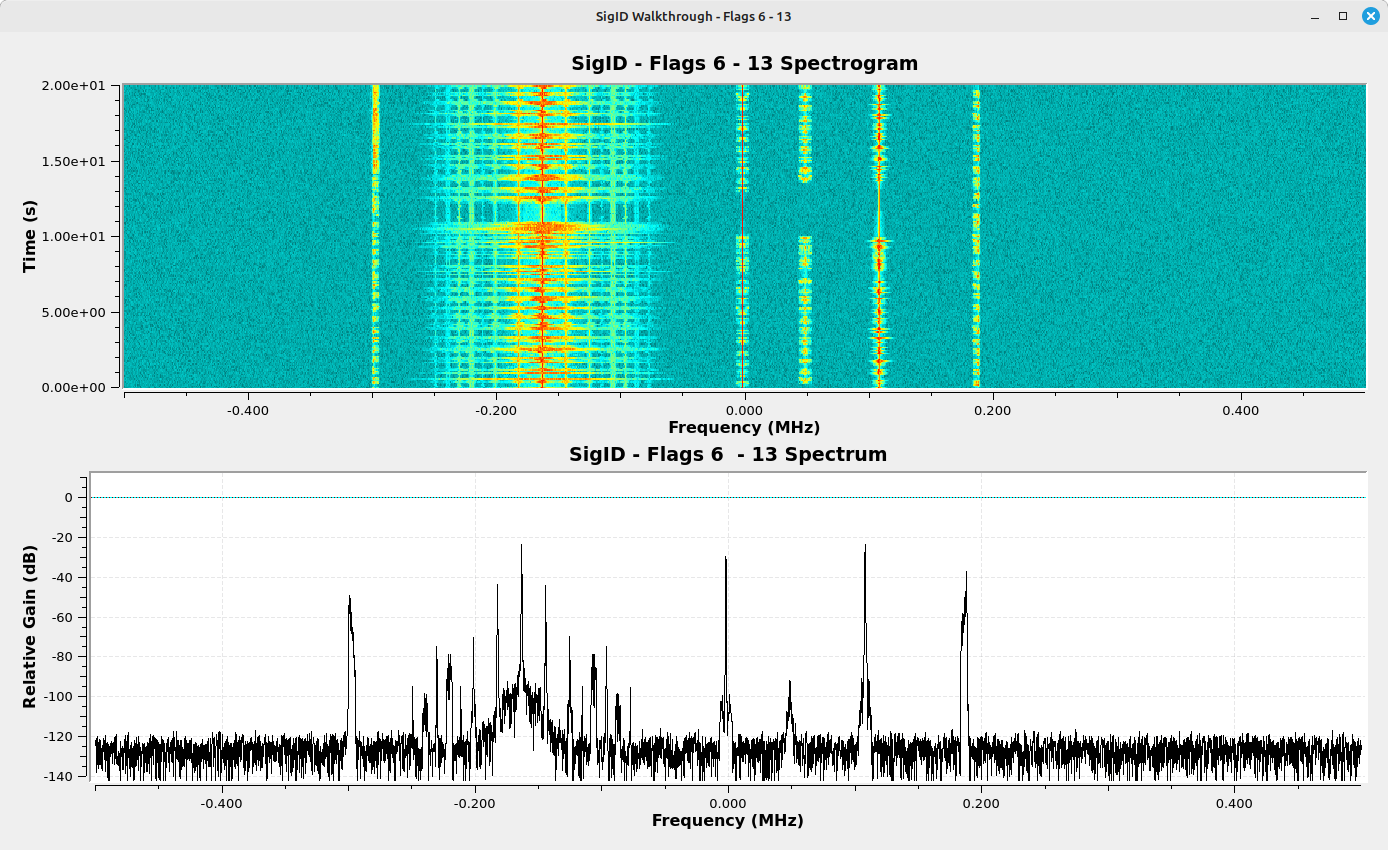

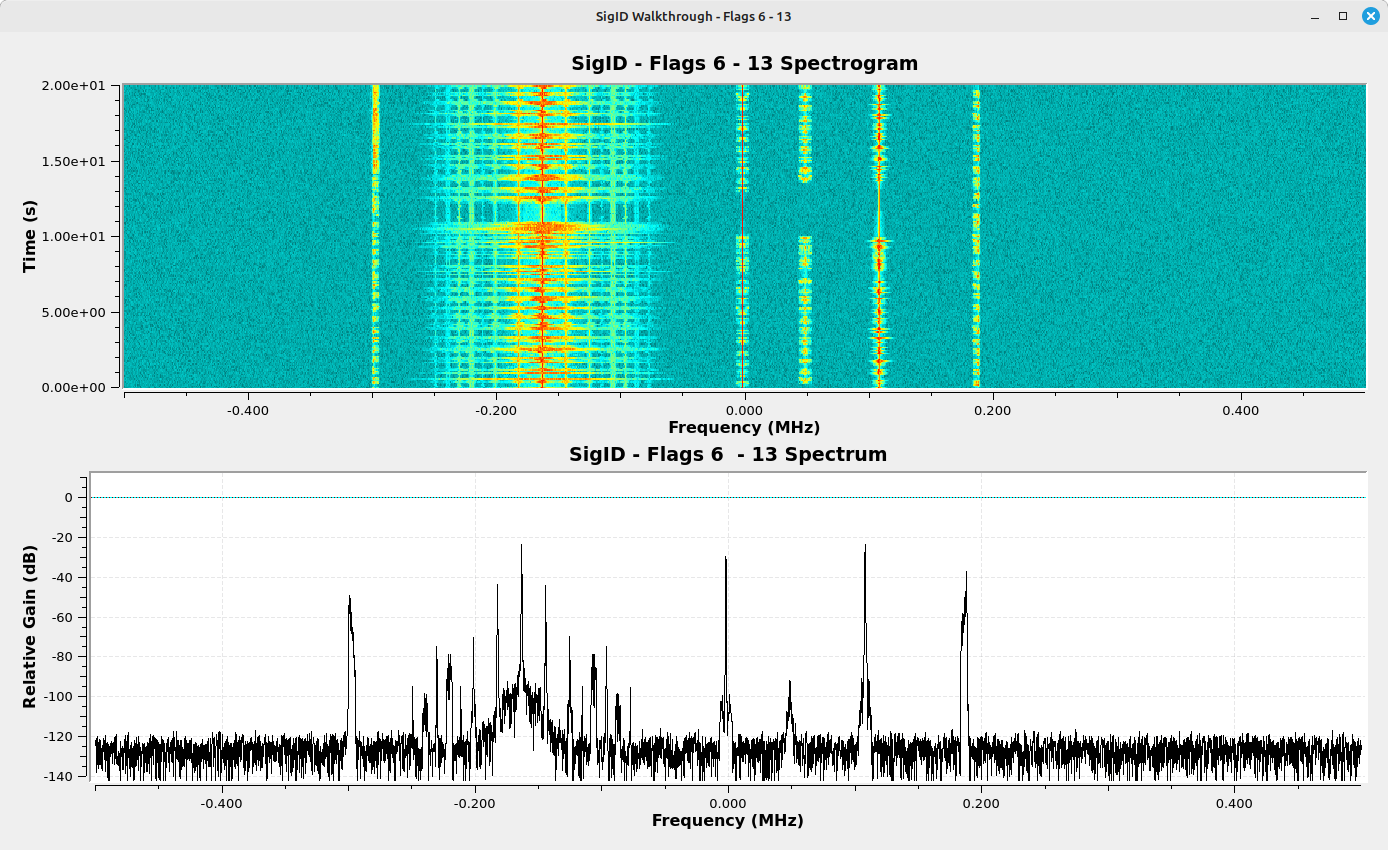

This is a 1 MHz spectrum consisting of six (6) signals total.

AM-SSB (USB)

Working from left to right in this spectrum, the first signal is an amplitude modulated (AM) single sideband using the upper sideband (USB) and with a suppressed carrier (SC). Its center frequency (the frequency where the carrier would be if it were not suppressed) is at roughly -300 kHz. The modulation can be determined based on the waterfall. The spectrogram shows a signal that has energy that is concentrated on the lower portion of the spectrum. This is indicative of AM-USB. And since there’s no consistent line on the left side, it’s a suppressed carrier.1

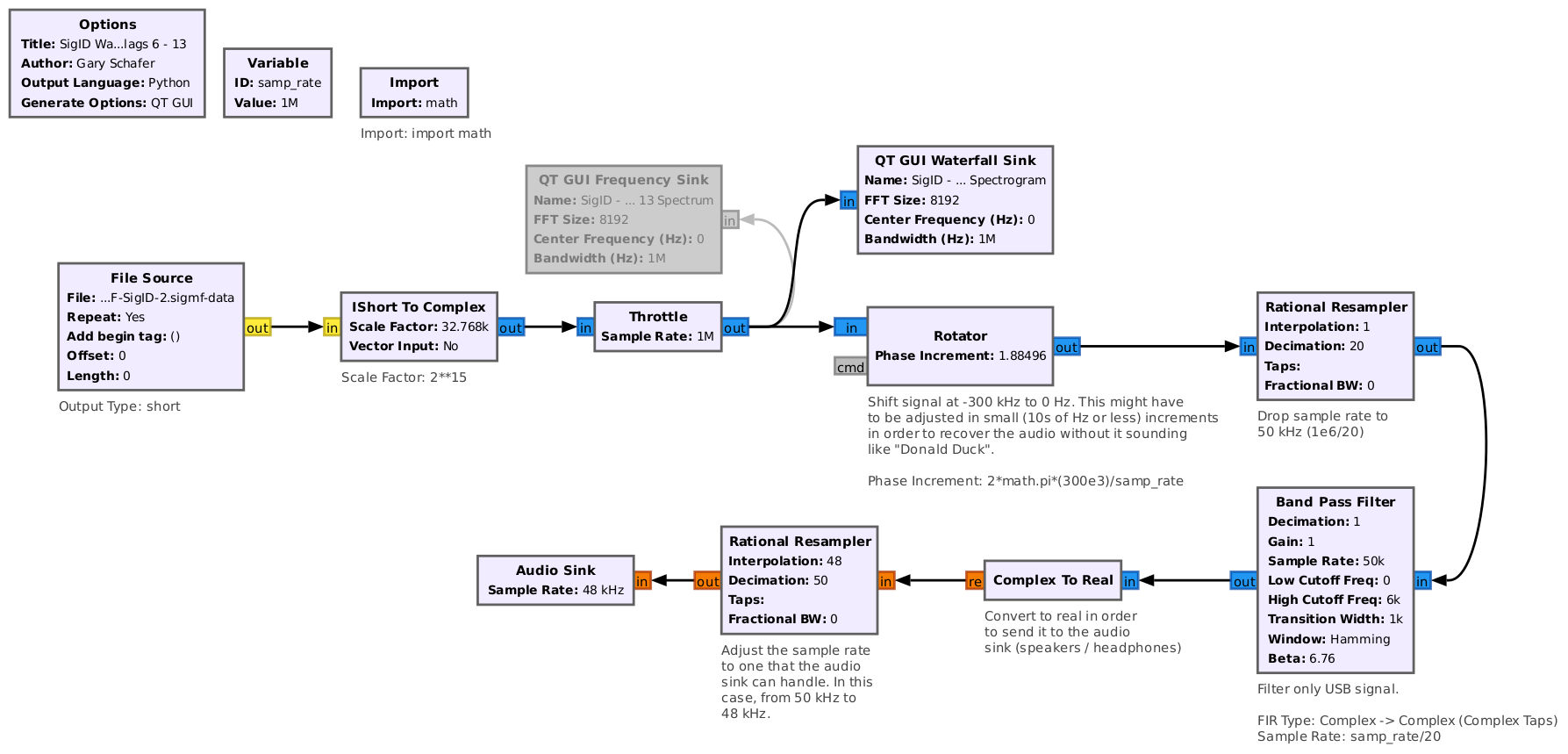

This signal can be recovered using the USB demodulator of GQRX, or using the following GNURadio flowgraph:

Note, the "Rotator", "Rational Resampler", and "Band Pass Filter" could easily be replaced with a "Frequency Xlating FIR Filter" block, which performs the shift, filter, decimate functions (in that order).

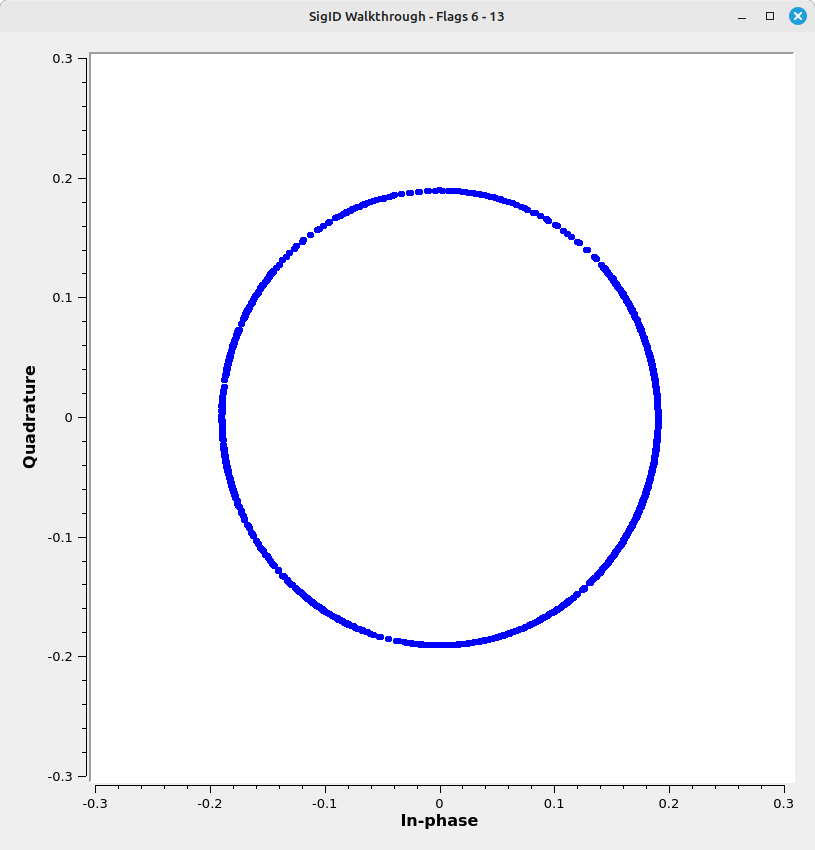

Wideband FM (WBFM)

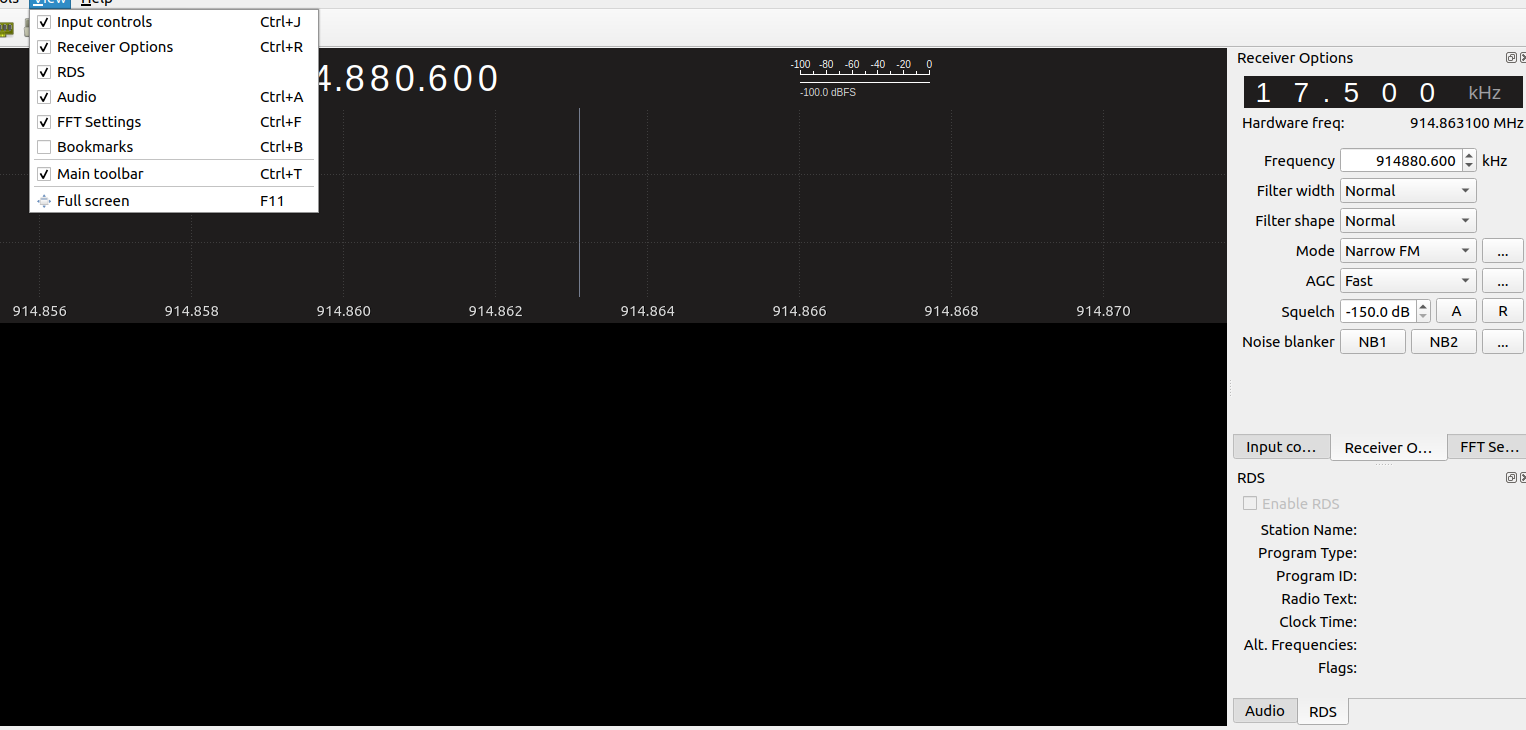

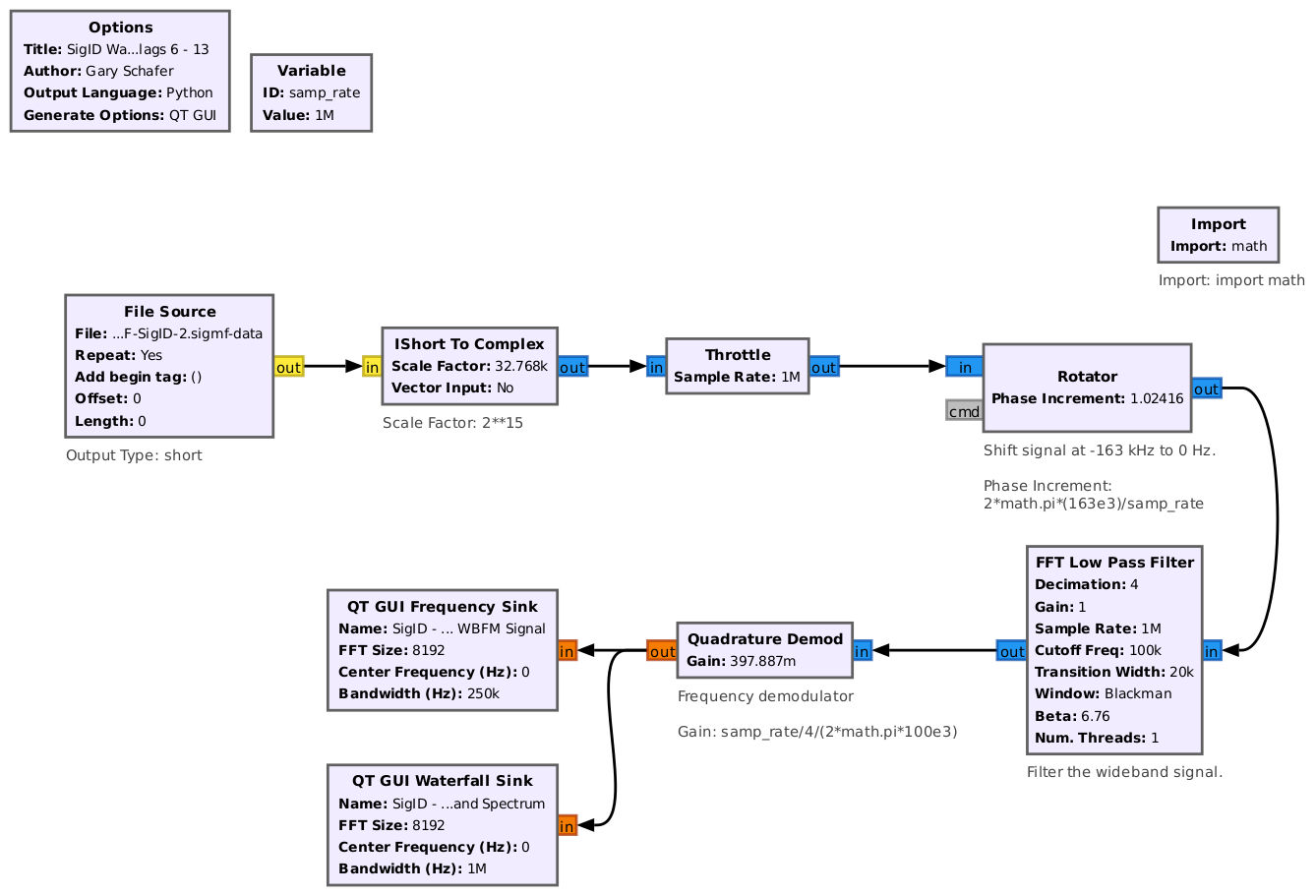

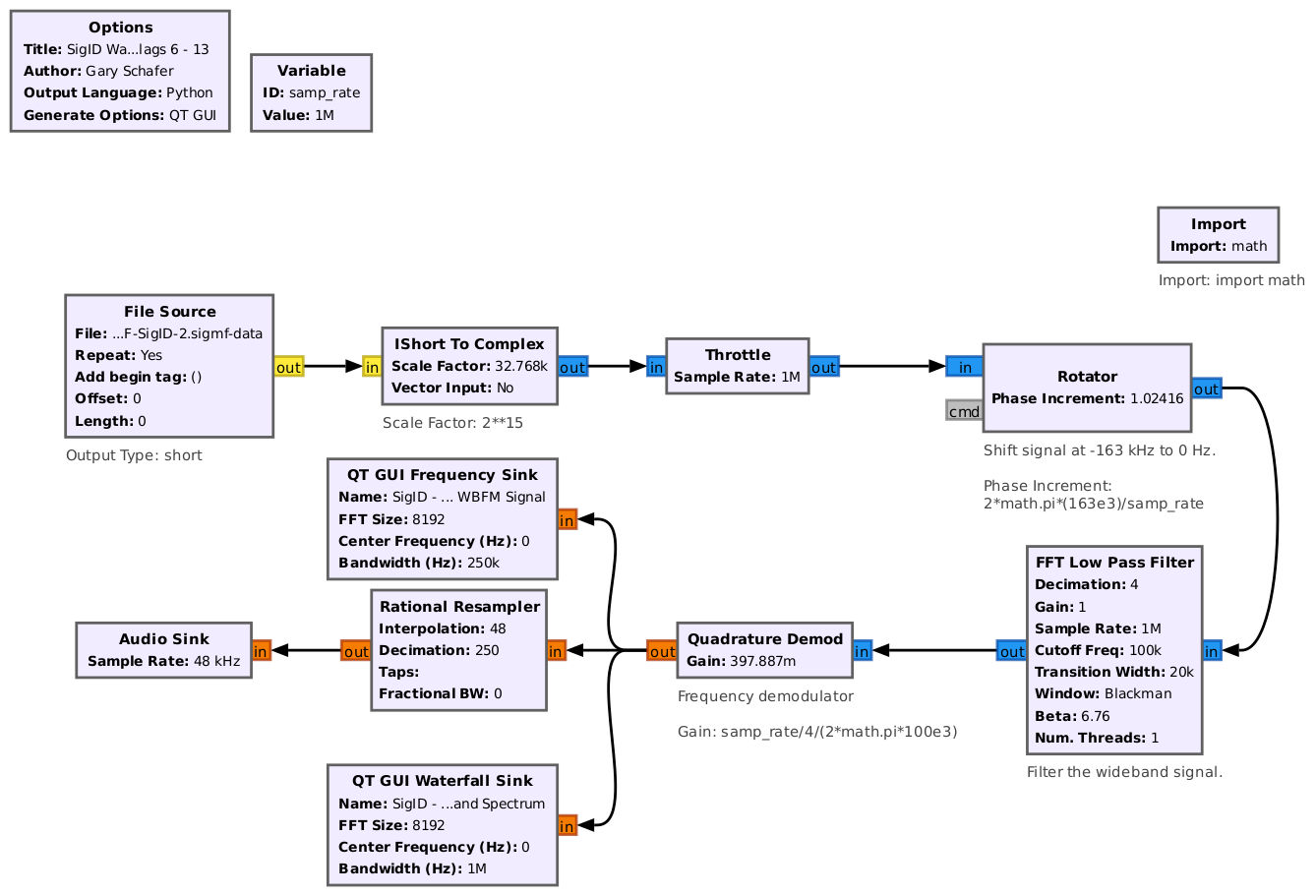

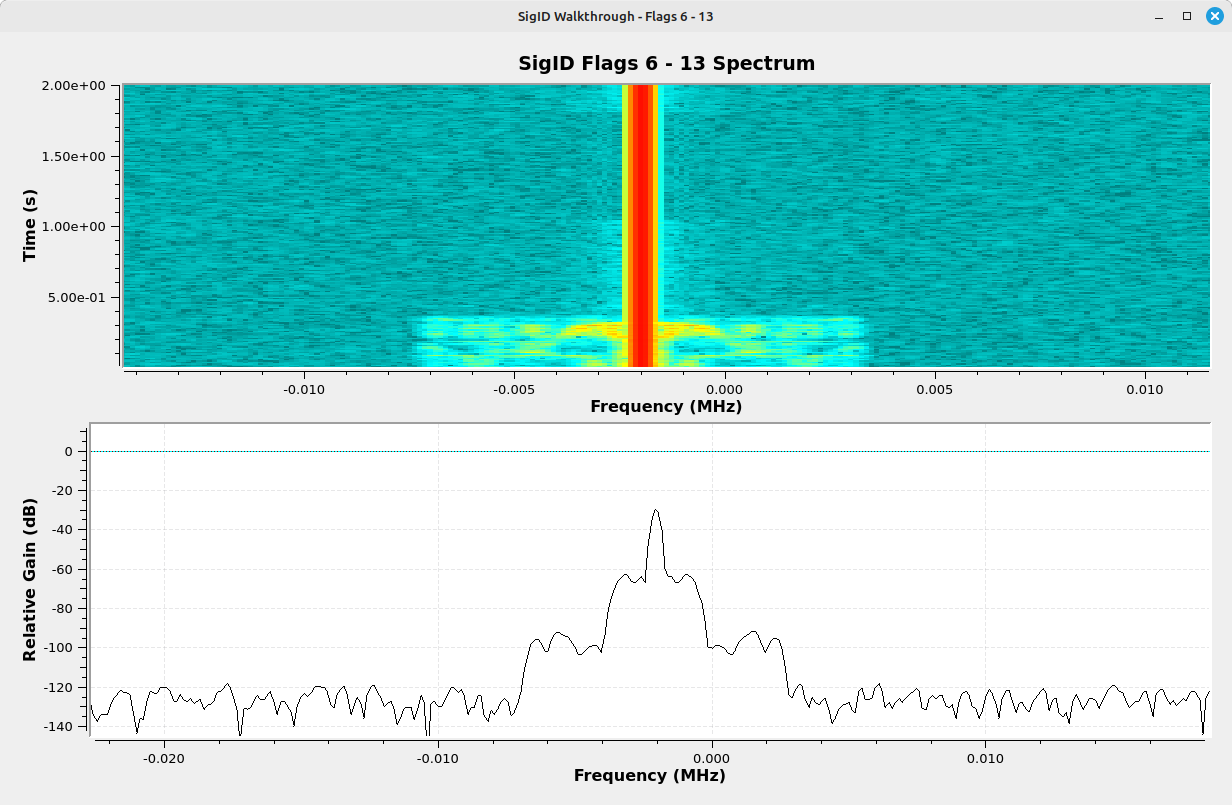

The next signal over, centered at roughly -163 kHz, is a higher bandwidth signal. A basic noise-to-noise bandwidth measurement shows it to be roughly 200 kHz wide. The spectrum shows a signal that appears to shift from side to side, indicative of frequency modulation. Further, filtering the signal and inputting it into a QT GUI Constellation Sink shows a clear ring, also indicative of frequency modulation.

As with AM signals, GQRX can readily demodulate the FM signal to baseband audio; it can also decode the RDS signal by turning on "View -> RDS" and selecting the "Enable RDS" from the RDS tab in the bottom right.

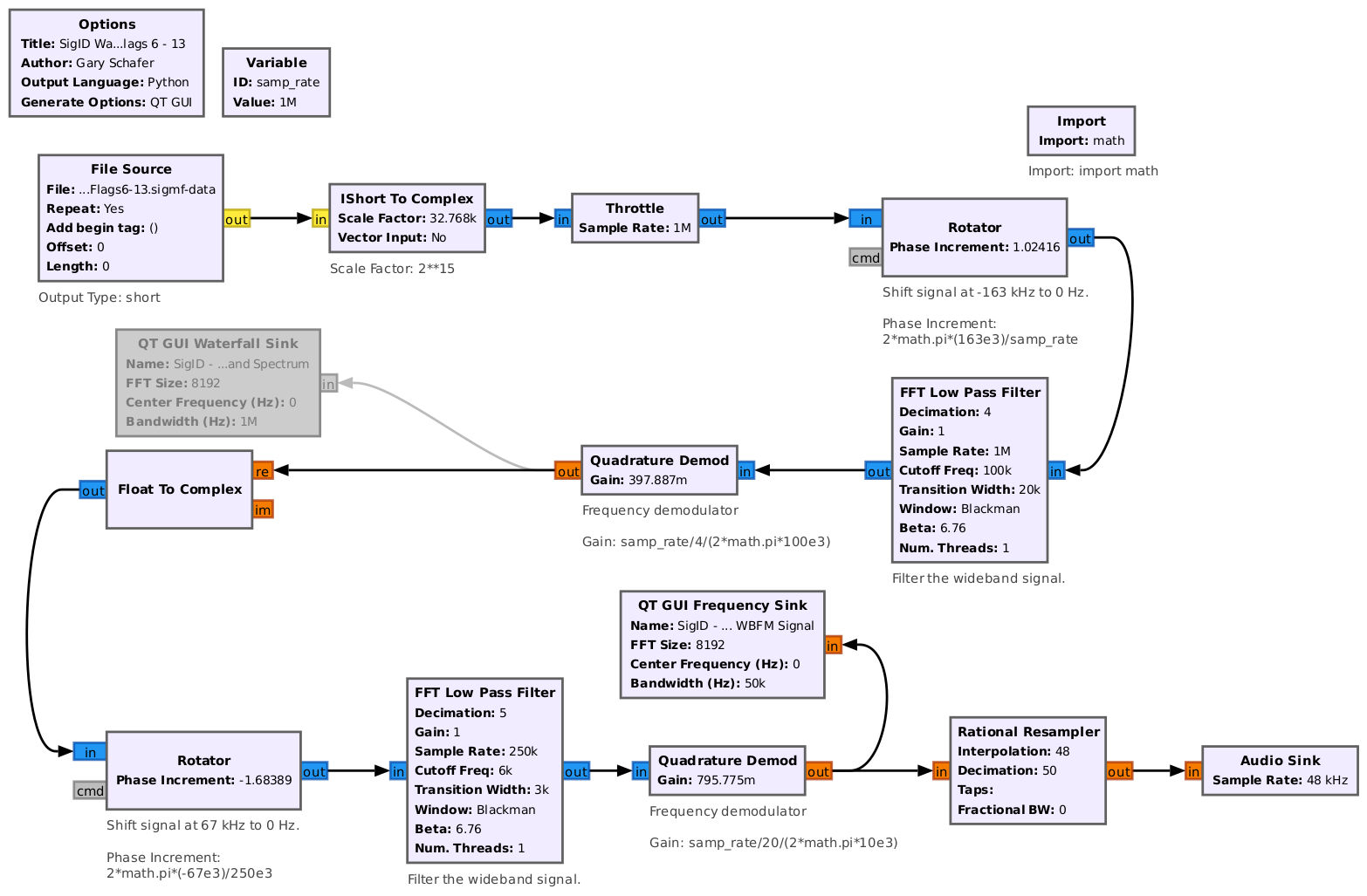

There are also several options for frequency demodulating this signal in GNURadio. These include the WBFM Receive, the WBFM Receive PLL, and the FM Demod. However, all of these tend to limit the amount of baseband spectrum available. Since we do not have an idea of what all the baseband spectrum will provide, we're going to use the Quadrature Demod block. This is just a basic frequency demodulator without anything else (as all of those other blocks provide).

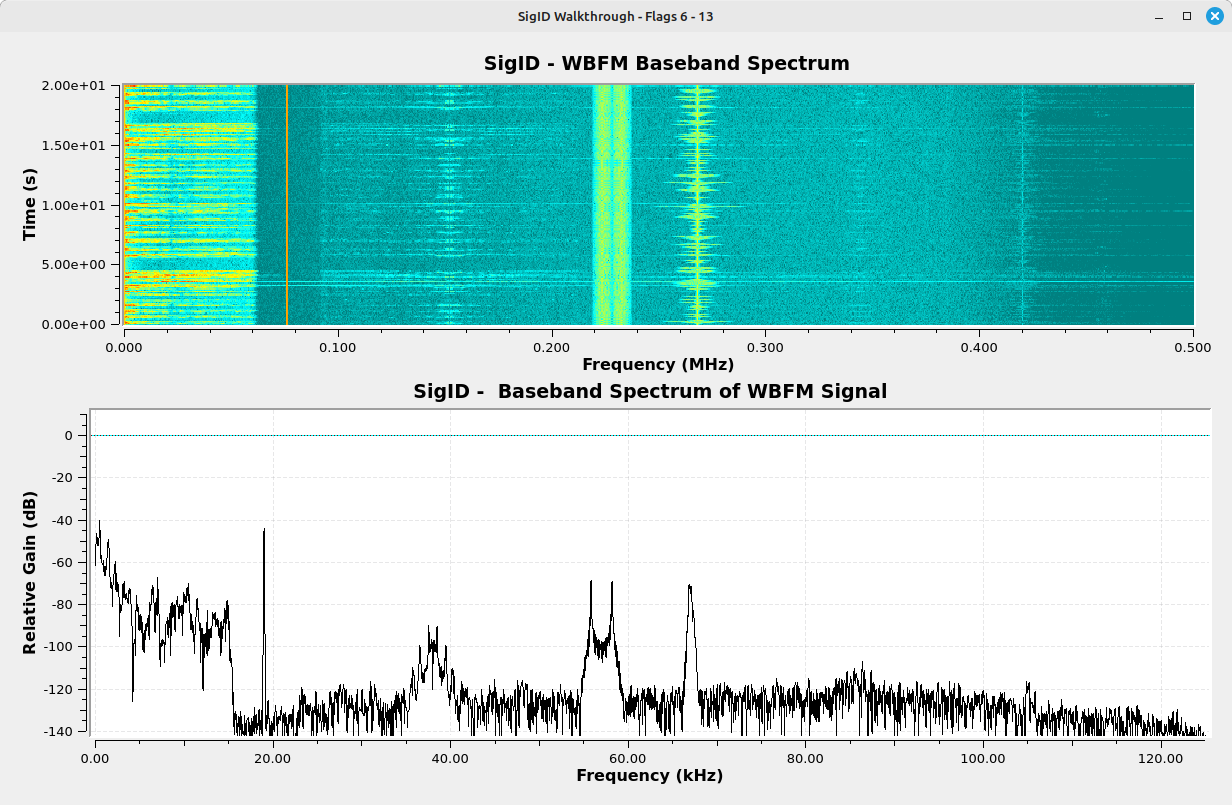

The output from this flowgraph shows a baseband spectrum with several signals.

Starting with the baseband audio (called the "L+R" audio, typically), we can use a Rational Resampler and Audio Sink to recover that audio. This provides Flag 6.

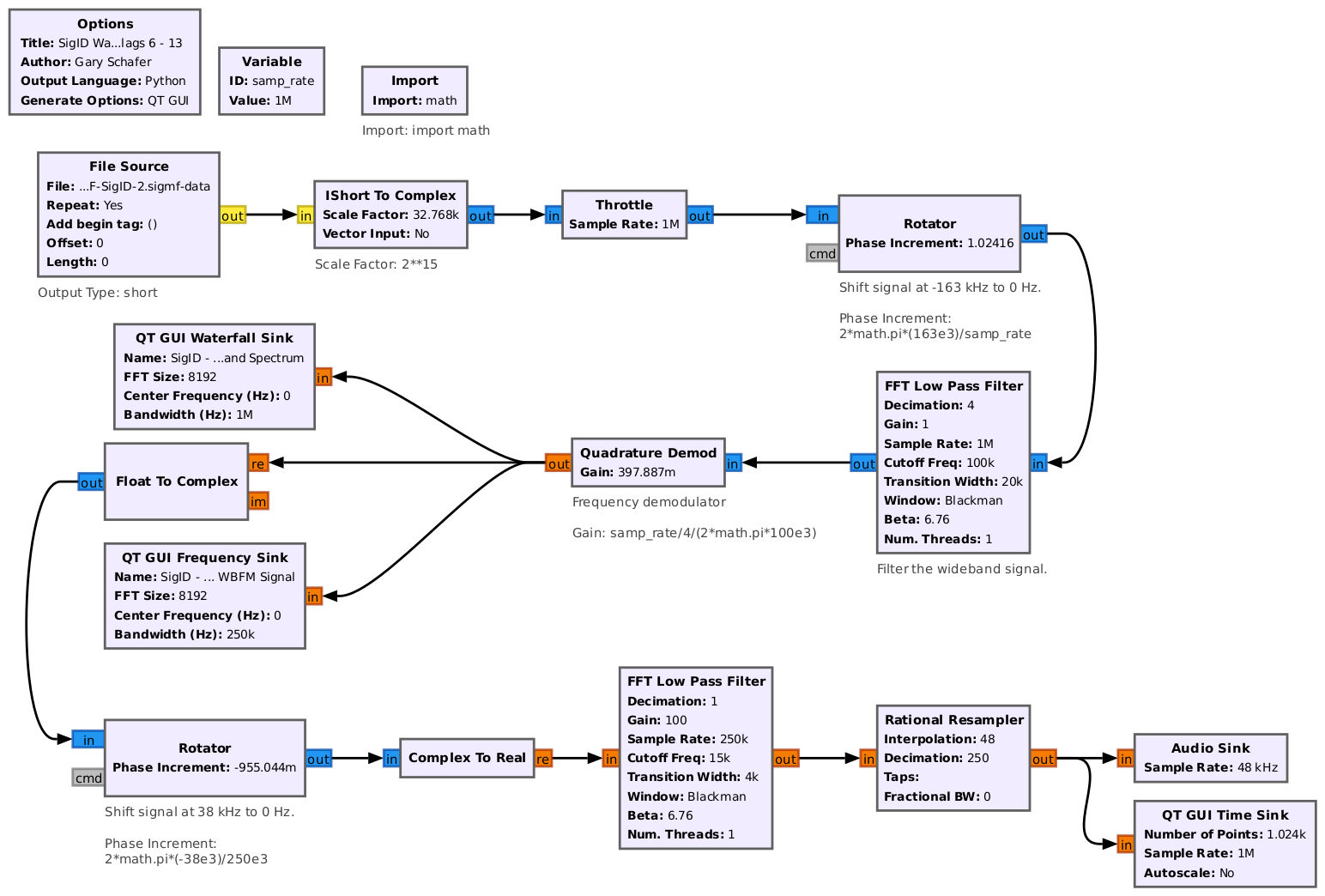

The next signal in the demodulated FM spectrum is the pilot tone. If you look at both the spectrum and spectrogram, you see that it does not vary in either amplitude or frequency. Hence, it can be ignored. The 38 kHz signal is typically called the "stereo" signal. Combined with the baseband, this signal provides individual left and right audio for stereo receivers. You can either create a flowgraph to use the pilot tone to demodulate this stereo signal (as is done in any stereo receiver), or you can just create a flowgraph to recover this signal without the pilot tone. That's what we'll do here.

Listening to this signal, you'll find that it has the exact same information as the baseband (L+R) signal.

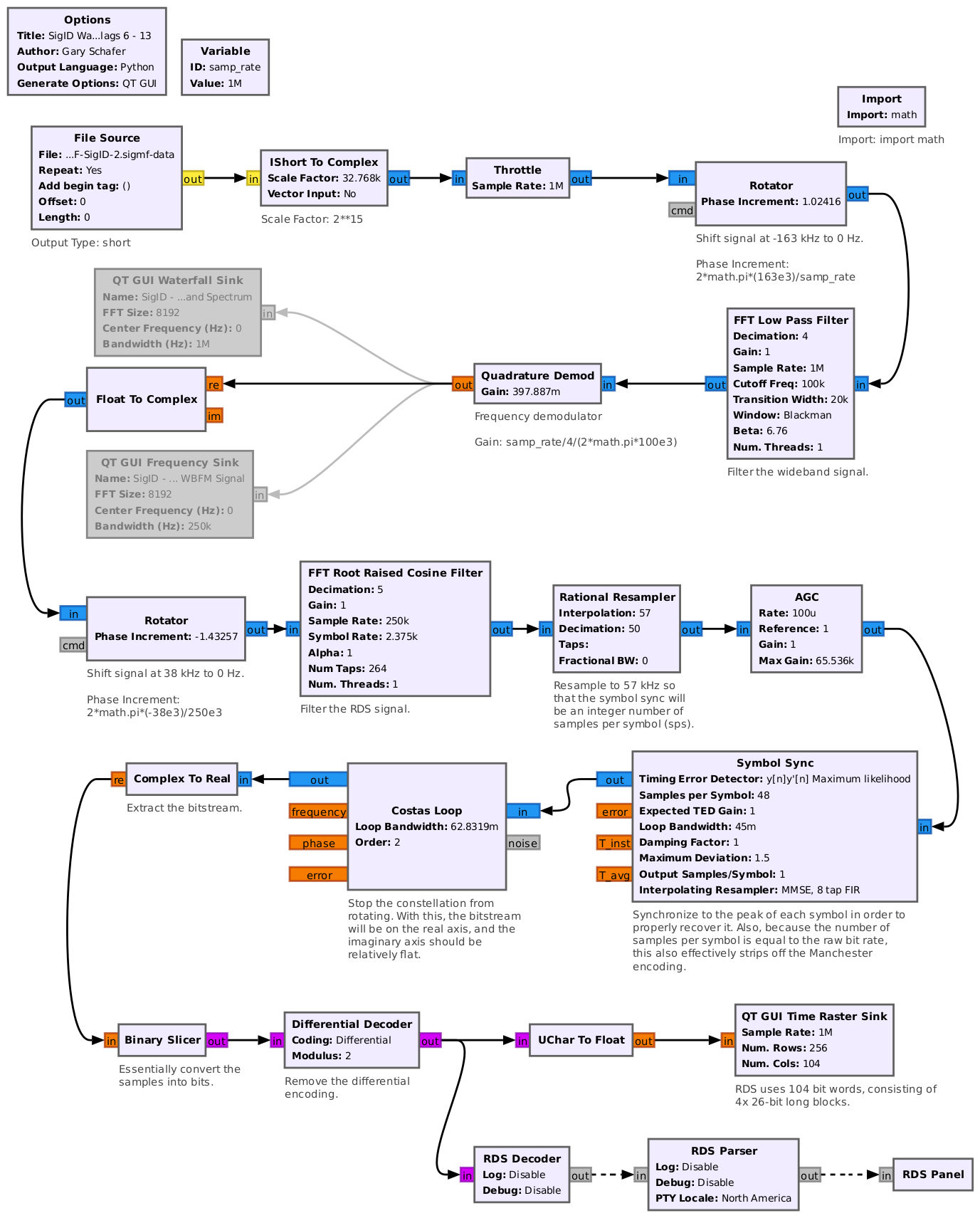

The next signal higher in the baseband spectrum is the Radio Data System (RDS), called the Radio Broadcast Data System (RBDS) in the US. It’s a low data rate, digitally-modulated subcarrier with a raw bit rate of 1187.5 bits/sec, derived from a 57 kHz clock divided by 48. The data is differentially encoded, then Manchester encoded. The Manchester encoding effectively doubles the symbol rate on the carrier (2375 symbols/sec). The output symbols are filtered using a root raised cosine (RRC) filter, then modulated onto the carrier using binary phase shift keying (BPSK). A flowgraph to extract this signal essentially does all of this in reverse.2
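To make the rate arithmetic and the two coding layers concrete, here is a small bit-level sketch. It ignores the RRC filtering and BPSK entirely and works on hard 0/1 values. The Manchester polarity (a 1 sent as the pair 1,0) and the differential reference bit of 0 are assumptions chosen for the demonstration; they are not taken from the standard or from the flowgraph.

```python
import numpy as np

SUBCARRIER_HZ = 57_000
BIT_RATE = SUBCARRIER_HZ / 48      # 1187.5 bits/s
SYMBOL_RATE = 2 * BIT_RATE         # 2375 Manchester half-symbols per second

def differential_encode(bits):
    """Each output bit is the input bit XOR the previous output (reference 0)."""
    out, prev = [], 0
    for b in bits:
        prev ^= int(b)
        out.append(prev)
    return np.array(out, dtype=np.uint8)

def differential_decode(bits):
    """Each output bit is the received bit XOR the previous received bit."""
    bits = np.asarray(bits, dtype=np.uint8)
    prev = np.concatenate(([0], bits[:-1])).astype(np.uint8)
    return bits ^ prev

def manchester_encode(bits):
    """Assumed polarity: 1 -> (1, 0), 0 -> (0, 1). Doubles the symbol count."""
    bits = np.asarray(bits, dtype=np.uint8)
    return np.column_stack((bits, bits ^ 1)).ravel()

def manchester_decode(symbols):
    """Compare the two halves of each pair (requires correct pair alignment)."""
    pairs = np.asarray(symbols, dtype=np.uint8).reshape(-1, 2)
    return (pairs[:, 0] > pairs[:, 1]).astype(np.uint8)

# Round trip: encode in transmitter order, decode in the reverse order.
payload = np.array([1, 0, 1, 1, 0, 0, 1, 0], dtype=np.uint8)
symbols = manchester_encode(differential_encode(payload))
decoded = differential_decode(manchester_decode(symbols))
print(BIT_RATE, SYMBOL_RATE, decoded)
```

A useful side effect of the differential layer is that a receiver locking 180 degrees out of phase (all bits inverted) still decodes correctly after the first bit.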

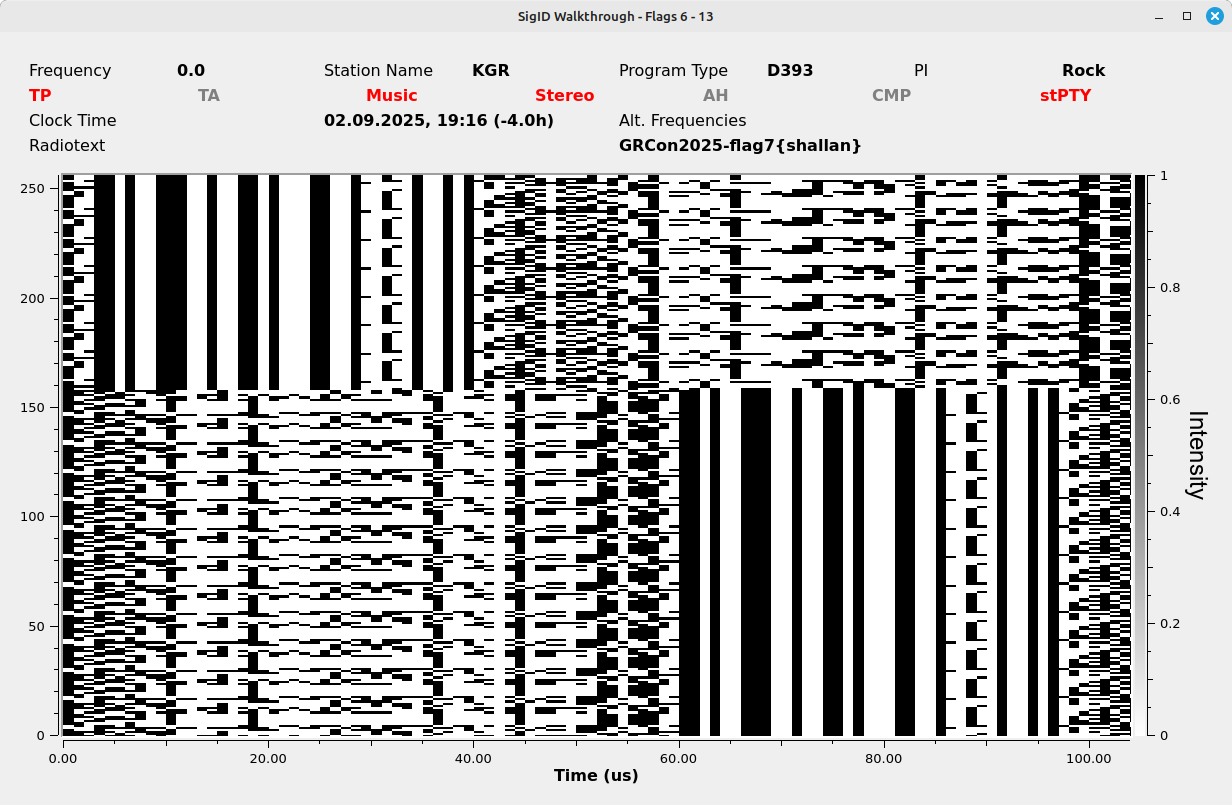

Running this flowgraph, we get the following bit raster, showing Flag 7.

The last subcarrier in the FM signal is centered at 67 kHz. It is a Subsidiary Communications Authorization (SCA) signal, which is NBFM. Using the following flowgraph, we can recover the last flag on this signal.

This completes the WBFM signal.

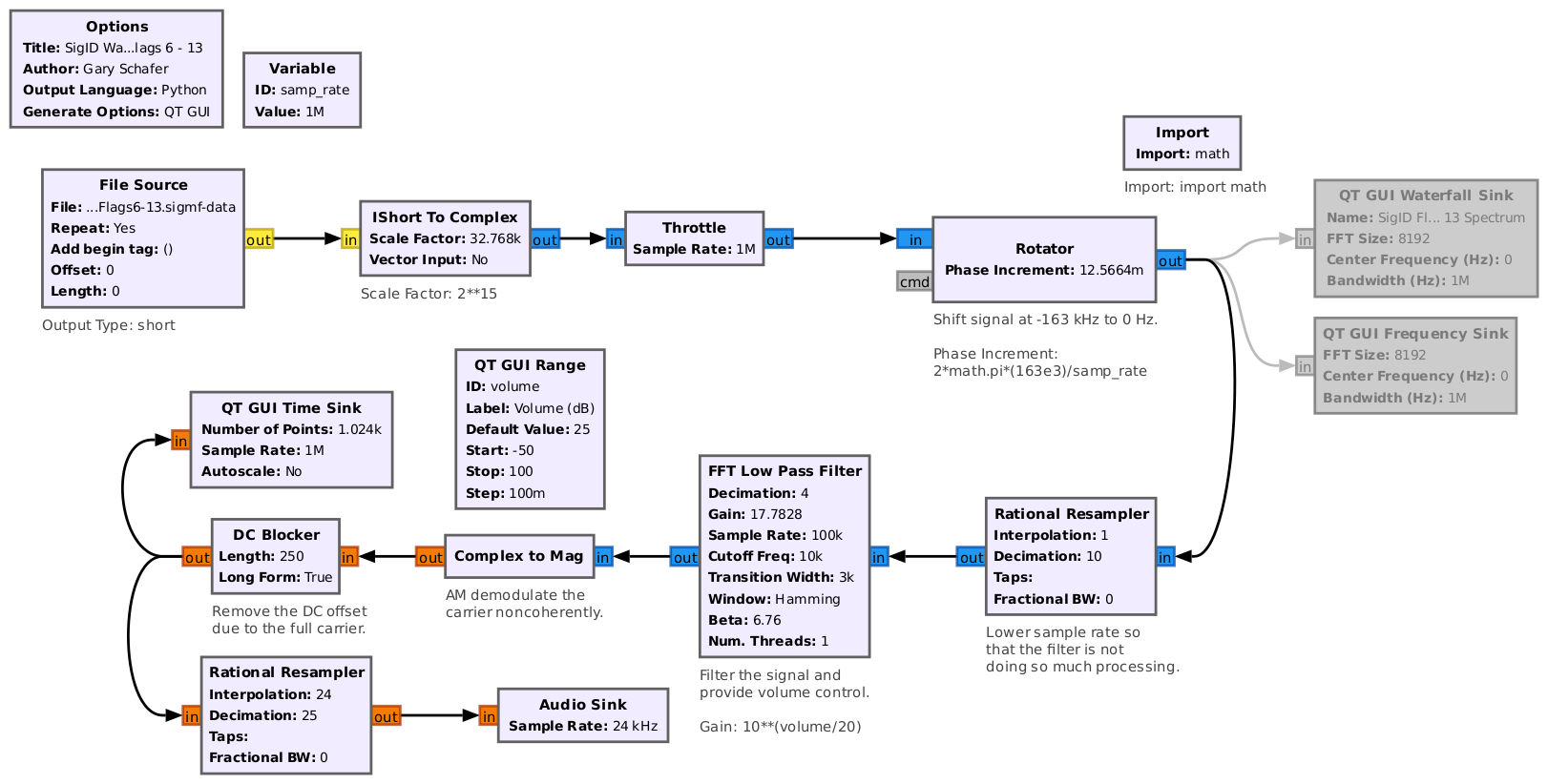

AM DSB-FC (Double Sideband-Full Carrier)

The next signal higher in the spectrum is centered at approximately -2 kHz. Looking at the spectral displays (spectral trace and spectrogram), you notice that the signal appears to have upper and lower sidebands, as well as a consistent amplitude spectral line in the center.

We could use GQRX's built-in AM demodulator to recover the audio, but using GNURadio is instructional. Because this is a full carrier signal (the consistent amplitude spectral line at the center of the signal), we can use a straightforward noncoherent demodulator on this signal (envelope detection). This is just a "Complex to Mag" block.
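In NumPy terms, envelope detection is a one-liner: take the magnitude of the complex samples and remove the DC term left by the carrier. The sketch below assumes the signal has already been filtered to isolate the AM carrier; the test signal (a 440 Hz tone at 50% modulation with a 1 kHz tuning error) is made up to show that the result does not depend on carrier frequency or phase.

```python
import numpy as np

def envelope_detect(iq):
    """Noncoherent AM demod: magnitude of the complex signal, carrier DC removed."""
    envelope = np.abs(iq)
    return envelope - envelope.mean()

# Assumed test signal: 440 Hz tone, 50% modulation, carrier mistuned by 1 kHz
# and rotated by 0.3 rad. None of that matters to the magnitude.
fs = 48_000
t = np.arange(fs) / fs
message = 0.5 * np.cos(2 * np.pi * 440 * t)
iq = (1.0 + message) * np.exp(1j * (2 * np.pi * 1_000 * t + 0.3))
audio = envelope_detect(iq)
print(np.max(np.abs(audio - message)))
```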

AM DSB-SC (Double Sideband Suppressed Carrier)

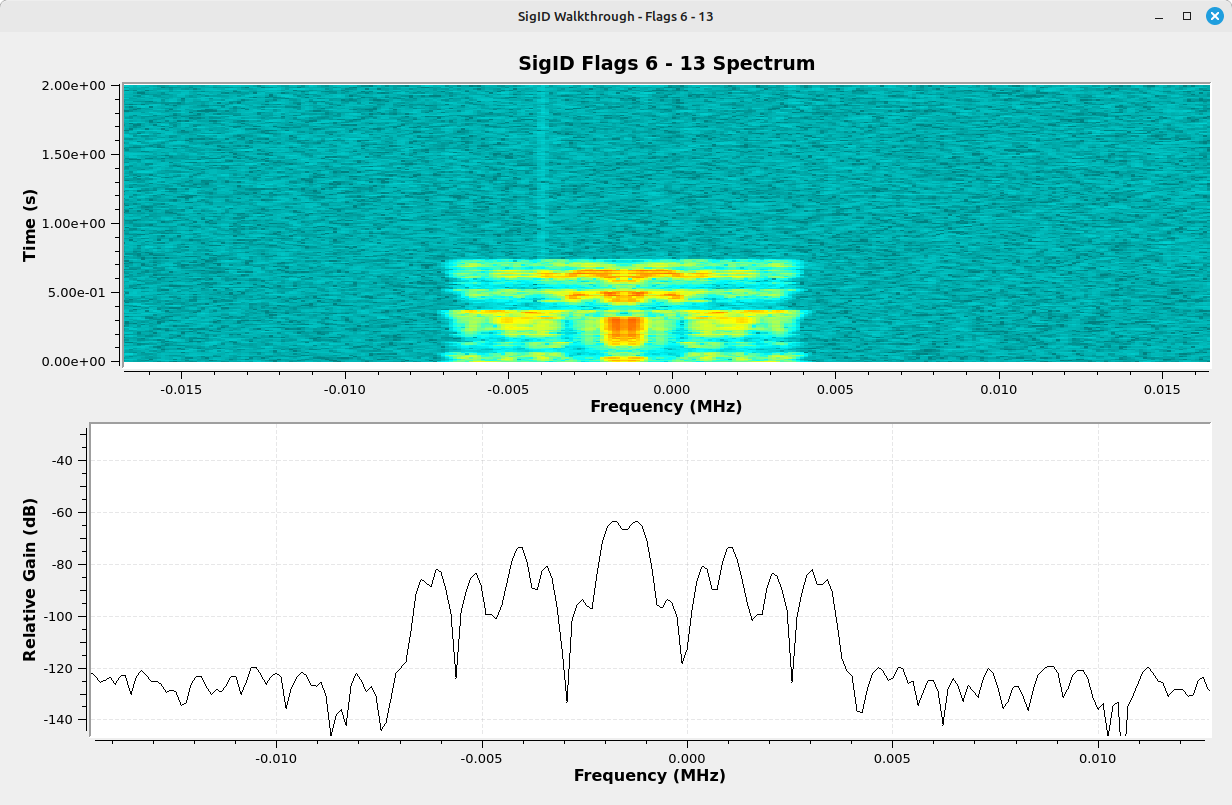

The next signal is centered at roughly 48.5 kHz and is shown below. The signal appears to have symmetry, with an upper and lower sideband. The sidebands appear to vary up / down, indicating AM. The mirror imagery between the upper and lower sidebands indicates double sideband (DSB). However, there's no consistent spectral line, indicating suppressed carrier (SC). This is an AM-DSB-SC signal.

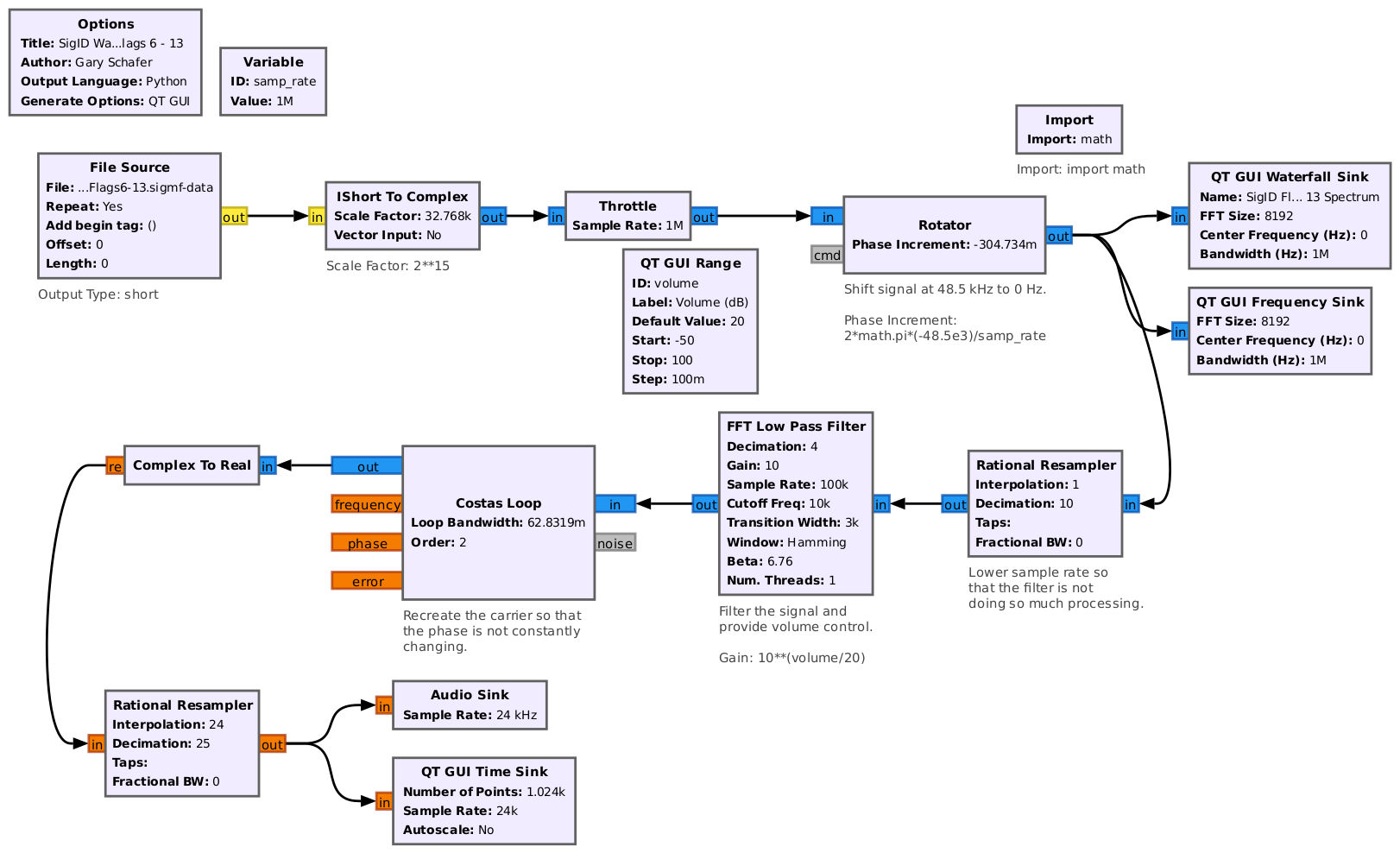

We could use GQRX's built-in AM demodulator to recover the audio, but using GNURadio is instructional. Because this is a DSB-SC signal, we cannot use noncoherent methods ("Complex to Mag", or similar) to demodulate it. In this case, we'll use a "Costas Loop" block to sync to the phase of the signal for demodulation. The filtered signal is also amplified (the gain setting in the filter), and passed through the Costas Loop to synchronize to the phase of the signal. The sync will lock the signal phase to the real axis, so extracting the real part of the complex signal ("Complex to Real" block) recovers the audio.
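To see why phase alignment matters, here is a simplified stand-in for the Costas Loop. It is not a loop at all: it is a one-shot squaring estimate that only works when the carrier has a constant phase offset and no residual frequency offset (an assumption that a real capture may well violate, which is why the flowgraph uses a tracking loop). Squaring the signal removes the sign of the real message and leaves twice the carrier phase.

```python
import numpy as np

def dsb_sc_demod(iq):
    """Coherent DSB-SC demod for a constant phase offset (no frequency offset).

    angle(sum(iq**2)) is twice the carrier phase, so halving it gives the
    rotation needed to put the message on the real axis. The result may be
    inverted (pi ambiguity), which is inaudible.
    """
    phase = 0.5 * np.angle(np.sum(iq ** 2))
    return np.real(iq * np.exp(-1j * phase))

# Assumed test signal: a 440 Hz tone with an unknown carrier phase of 0.7 rad.
t = np.arange(48_000) / 48_000
message = np.cos(2 * np.pi * 440 * t)
received = message * np.exp(1j * 0.7)
audio = dsb_sc_demod(received)

# Taking the magnitude instead would fold the negative half-cycles upward:
folded = np.abs(received)
print(np.max(np.abs(np.abs(audio) - np.abs(message))), folded.min())
```

The `folded` line shows what "Complex to Mag" would produce on this signal: a rectified waveform, not the original audio.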

NBFM (Narrowband FM)

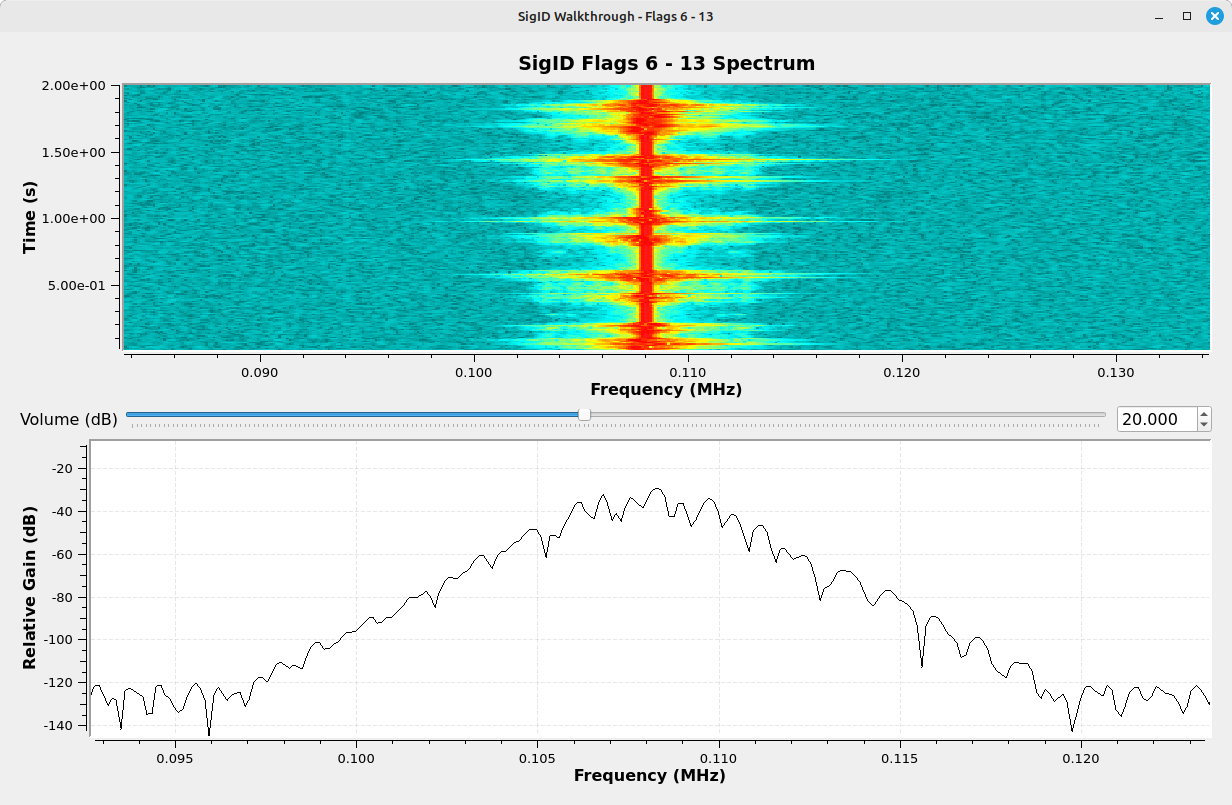

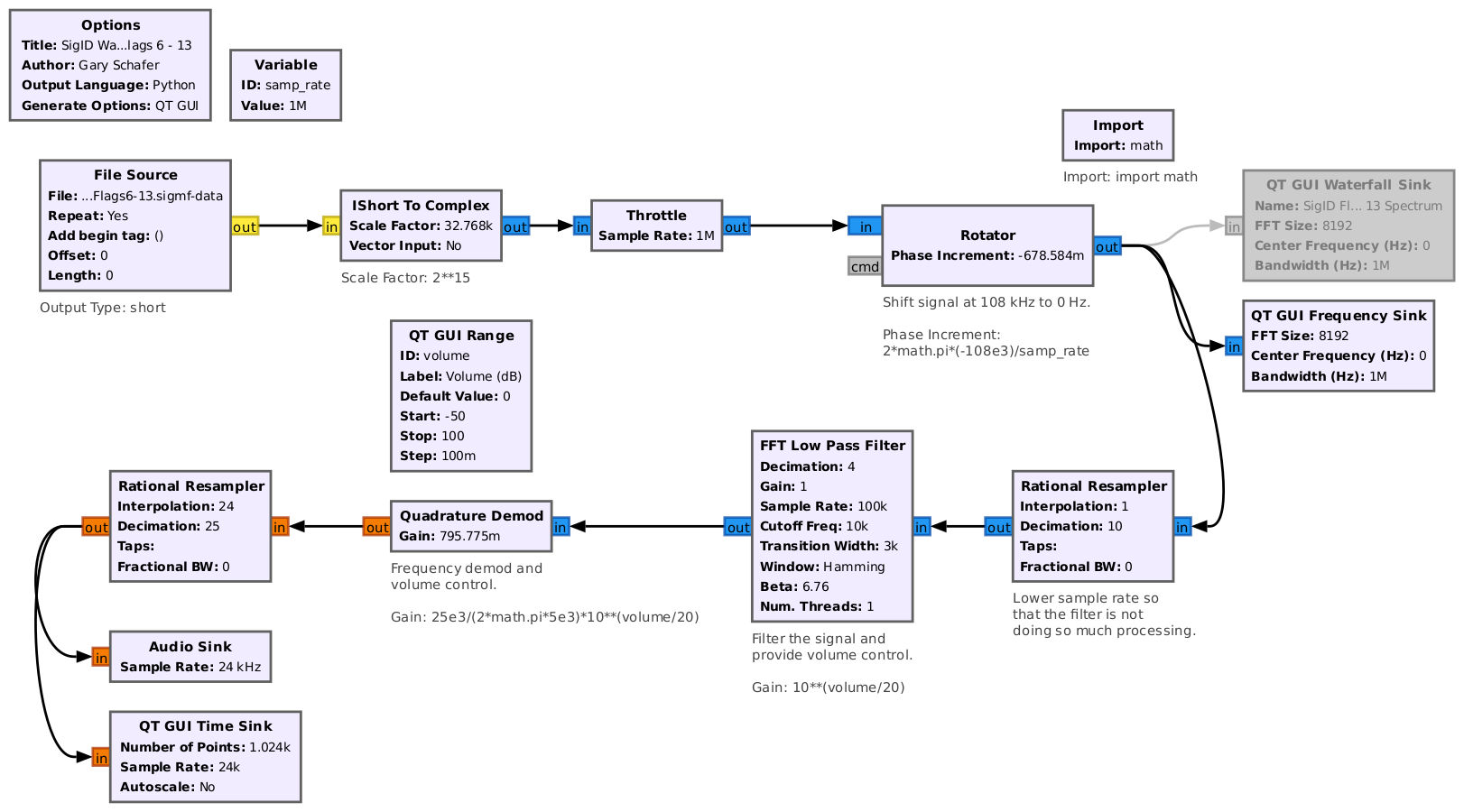

The next signal is centered at ~108 kHz. The signal, at first glance, appears similar to the AM-DSB-FC signal. However, when the signal reaches its peak, the spectrum is not symmetric, and the peak at the center disappears. This indicates FM, specifically narrowband FM (NBFM).

We can either use GQRX or GNURadio's NBFM Receive block or Quadrature Demod with a narrowband filter -- for the sake of granularity, we will use the latter. The result is a baseband audio reading the flag.

AM-SSB (LSB)

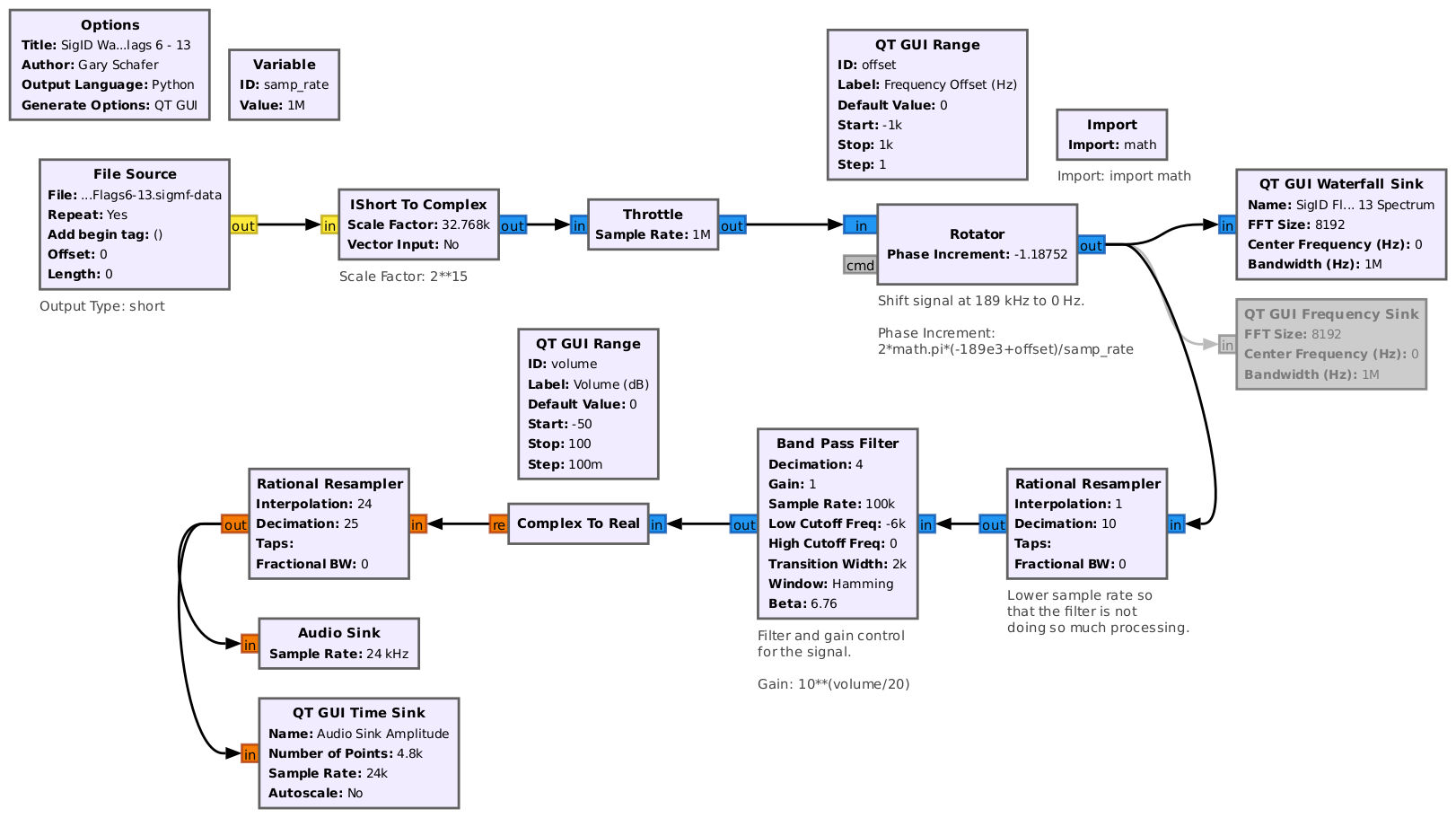

The last signal in this spectrum is centered at roughly 189 kHz:

The spectrum is similar to the lowest frequency signal in the spectrum, which was AM-USB-SC. The difference is that this signal appears to be a mirror image of that signal. That's because this signal is AM-LSB-SC. This can be seen from the fact that its spectrum does not appear to be symmetrical. The energy is stronger on the higher frequency portion of the signal, and the signal varies amplitude, indicating single sideband (SSB) AM, and specifically LSB-AM.

Again, we have options (GQRX, GNURadio), and we build the following GNURadio flowgraph, which produces baseband audio dictating the flag.

Signal Identification Final Comments

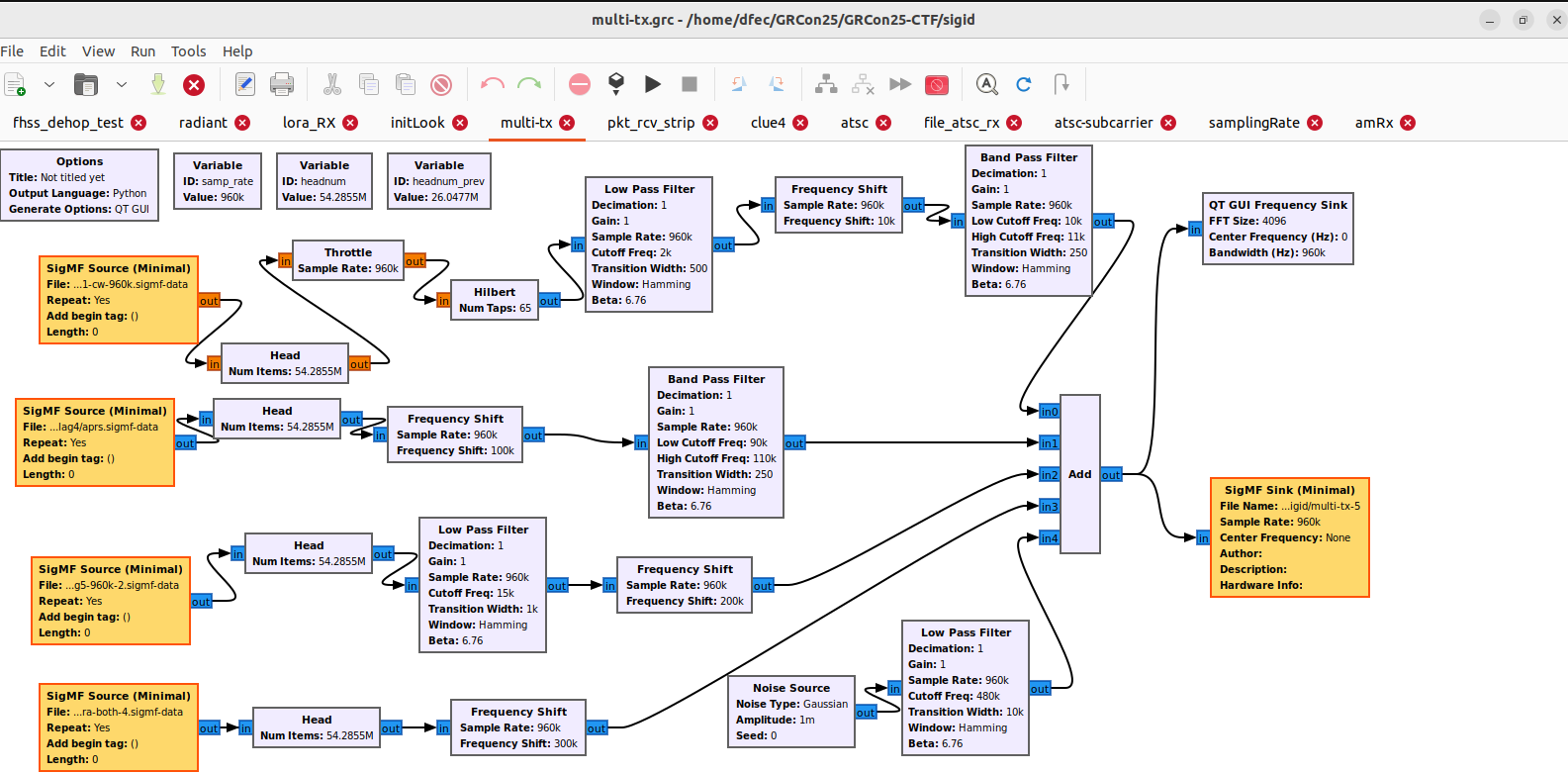

For many of these challenges, we combined a number of signals generated from separate flowgraphs or data sources. In this case, we generated individual [sigmf](https://sigmf.org/) files, then resampled and combined the files in GNURadio. For example, we may generate a NBFM signal at 48ksps, but would be required to upsample the file to 1Msps for the overall spectrum. This takes some finesse, as the individual files may contain different numbers of IQ samples -- the head block is set to allow the "longest" file to play. Additionally, we want to shift each signal to a unique portion of the spectrum. This is commonly referred to as Frequency Division Multiplexing and we are using the "Frequency Shift" block (as opposed to the "Rotator" block) for this shift.
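The combining step can be sketched in NumPy as follows. This is an illustration of the idea rather than the flowgraph we used: it assumes every input has already been resampled to the common rate, zero-pads shorter inputs to the length of the longest one, shifts each to its own offset, and sums. The offsets and signal lengths in the demo are arbitrary, and `fdm_combine` is a hypothetical name.

```python
from fractions import Fraction
import numpy as np

# Going from 48 ksps to 1 Msps is a rational resample: up by 125, down by 6.
RESAMPLE_RATIO = Fraction(1_000_000, 48_000)

def fdm_combine(signals, offsets_hz, sample_rate):
    """Zero-pad to the longest signal, shift each to its offset, and sum.

    Assumes all signals are complex baseband at the same sample_rate.
    """
    length = max(len(s) for s in signals)
    n = np.arange(length)
    total = np.zeros(length, dtype=complex)
    for sig, offset in zip(signals, offsets_hz):
        padded = np.zeros(length, dtype=complex)
        padded[: len(sig)] = sig
        total += padded * np.exp(2j * np.pi * offset * n / sample_rate)
    return total

# Demo with two unmodulated carriers of different lengths (arbitrary values).
fs = 1_000_000
combined = fdm_combine([np.ones(1000), np.ones(600)], [-100_000, 200_000], fs)
spectrum = np.abs(np.fft.fft(combined))
freqs = np.fft.fftfreq(len(combined), 1 / fs)
strongest = sorted(np.round(freqs[np.argsort(spectrum)[-2:]]).tolist())
print(RESAMPLE_RATIO, strongest)
```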

Radiant

This challenge was inspired by the first assignment I gave my students in their applied SDR class. It breaks a file (really, any file will work) into bytes, then bits, and FSK modulates those bits. I don’t include any error correction or noise, which should make the demodulation and decoding fairly straightforward, using the FSK tutorial on GNURadio’s wiki.

The first flag is the modulation type, which is 2FSK.

To find the other flags, we need to demodulate the signal and find the image referenced in the clue.

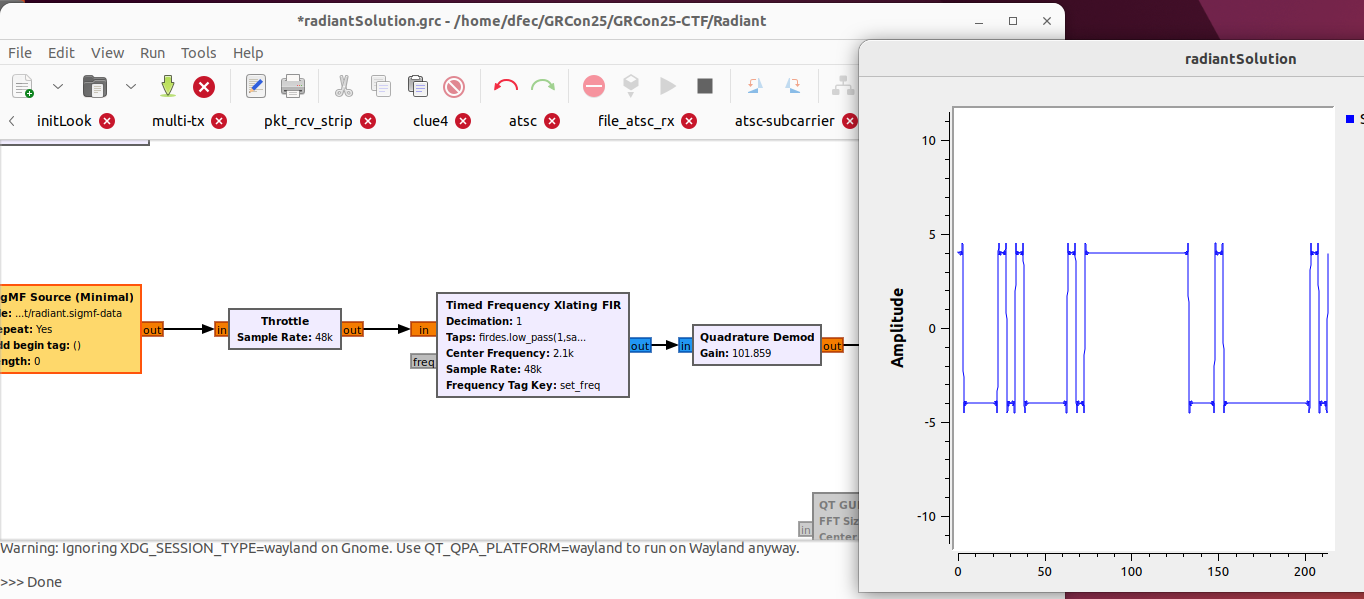

In our case, we need to determine the baud rate and samples per symbol. Using the FFT or the waterfall plot, we measure the two frequencies as 1800Hz and 2400Hz, making the frequency deviation 600Hz. Then, using a quadrature demod, we can measure the bitlength:

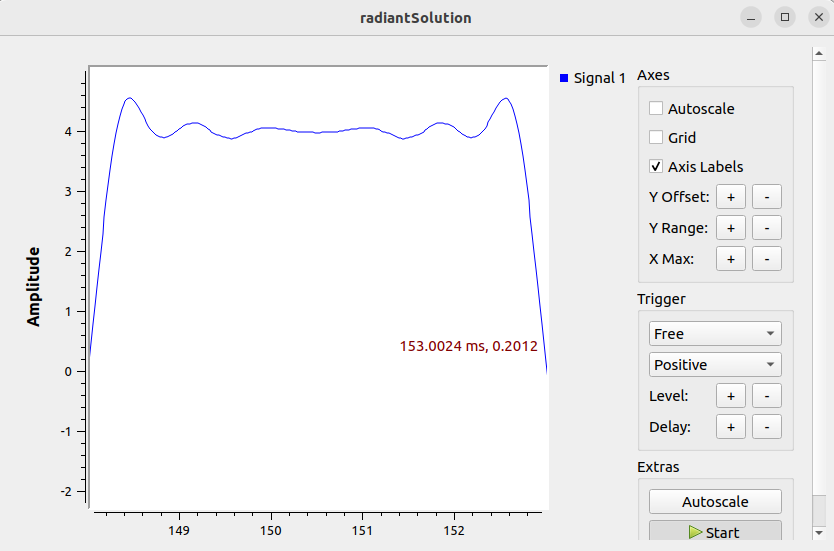

Zooming in on what appears to be a single bit, we measure its length as 5 ms, which makes the symbol rate 200 baud (200 bps for 2FSK). Given a sample rate of 48 kHz, that works out to 240 samples per symbol.

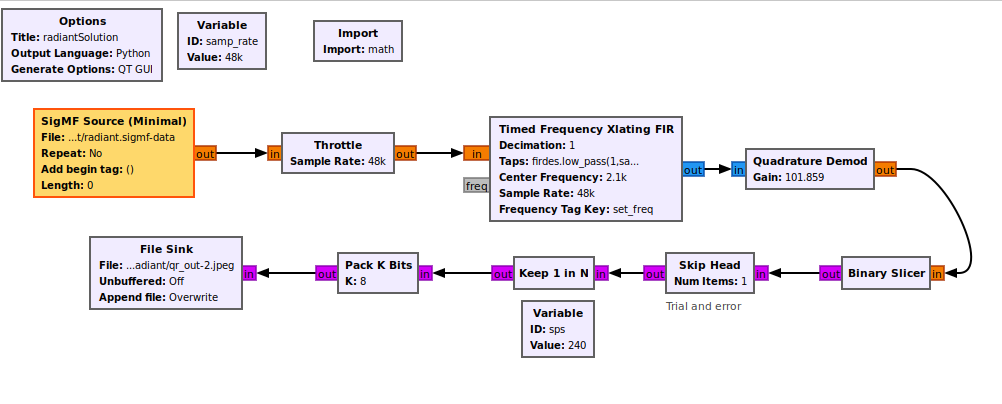

Finally, we use the binary slicer to shift the values to 0’s and 1’s, place a delay block to allow for bit errors at the beginning of the transmission, then decimate by 240 and pack the bits into bytes, and save the file into an image format. The final flowgraph is as follows:
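The slicing and packing steps translate directly into NumPy. The sketch below assumes the quadrature demod output is centered on zero, that the higher tone maps to a 1, and that bits are packed most-significant-bit first; all three are assumptions, and flipping any of them is a quick thing to try if the output looks wrong. Unlike the flowgraph, this version samples in the middle of each symbol rather than at its start.

```python
import numpy as np

SAMPLE_RATE = 48_000
BIT_TIME_S = 0.005                   # 5 ms measured bit length
BAUD = round(1 / BIT_TIME_S)         # 200 symbols per second
SPS = SAMPLE_RATE // BAUD            # 240 samples per symbol

def bits_to_bytes(demodulated, sps=SPS, delay=0):
    """Slice at zero, keep one sample per symbol, pack MSB-first into bytes."""
    sliced = (np.asarray(demodulated) > 0).astype(np.uint8)
    # `delay` skips samples before the first symbol; then sample mid-symbol.
    picked = sliced[delay + sps // 2 :: sps]
    picked = picked[: len(picked) // 8 * 8]   # drop any partial trailing byte
    return np.packbits(picked).tobytes()

# Round-trip demo on a made-up two-byte payload (not the challenge file).
payload_bits = np.unpackbits(np.frombuffer(b"GR", dtype=np.uint8))
waveform = np.repeat(2.0 * payload_bits - 1.0, SPS)
recovered = bits_to_bytes(waveform)

# Same payload with 100 idle samples in front, handled by the delay argument.
delayed = bits_to_bytes(np.concatenate((np.zeros(100), waveform)), delay=100)
print(BAUD, SPS, recovered, delayed)
```

Writing `recovered` to disk with `open(path, "wb")` would then give the file to open in an image viewer.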

The image is a QR code, which gives the second flag.

The other two flags are embedded in the file using steganography methods. Using steghide, you can find one of the flags. Inspecting the image data as text will give the other flag in plain text.

Supermurgatroid

Coming soon

Footnotes

1. The term “suppressed carrier” here is a misnomer. The carrier is not actually suppressed. There must be a carrier, otherwise, there would be nothing to modulate. What is meant here is that there is no unmodulated sinewave that acts as a reference with which we could noncoherently demodulate this signal. However, the term “suppressed carrier” has a long history, and who am I to argue with history? (steps down from soapbox)

2. The standard states, “The subcarrier is amplitude-modulated by the shaped and biphase coded data signal (see 1.7). The subcarrier is suppressed. This method of modulation may alternatively be thought of as a form of two-phase phase-shift-keying (psk) with a phase deviation of ± 90°.” (Source: NRSC-4-A, United States RBDS Standard, Specification of the radio broadcast data system (RBDS), April, 2005).